Recently Google issued a “code red” over ChatGPT’s latent threat to its search business and the possibility of total disruption. Some testing companies also sounded the alarm, claiming that quality assurance is no longer needed. But let’s be realistic: that alarm is unfounded for QA engineers. Firstly, ChatGPT for software testing is another great tool for better and faster QA, but not a total substitute. Secondly, it’s not always available (unlike your QA engineer, who shows up and works in a bug reporting tool every day), and the pricing is still uncertain.

ChatGPT definition

I guess it’s quite obvious that ChatGPT is artificial intelligence. But what actually is it? Here is the definition of ChatGPT given by itself:

“ChatGPT is a language model developed by OpenAI, an artificial intelligence research laboratory. It is based on the transformer architecture and is trained on a large corpus of text data from the internet.

ChatGPT can generate human-like text based on the input provided, making it capable of performing a wide range of language-related tasks such as text completion, question answering, and text generation.

It has been fine-tuned on specific tasks, such as answering customer service queries and providing responses to users in a conversational context, making it a popular tool for building chatbots and other conversational AI applications.”

ChatGPT became available for mass use in November 2022. Developed by OpenAI, this chatbot caused a huge buzz in the IT world. A lot of specialists found this tool a pretty good “partner in crime”. It helps accomplish certain tasks faster, like writing simple lines of code for developers, verifying information and fact-checking for journalists, and writing original copy for marketers. Some tech people also got pretty gloomy for the same reason: wouldn’t ChatGPT also jeopardise their jobs?

No matter the attitude, employees should exercise caution when using AI tools, as these tools can spread false information and remove the personal touch from tasks such as writing. Despite the increasing use of AI, many organisations have yet to establish clear guidelines for how employees should use it.

Then there is Microsoft. As the biggest partner and investor of OpenAI (the company behind ChatGPT), Microsoft has recently authorised its employees to use the chatbot for work purposes as long as confidential information is not shared.

We also made an in-house GPT tool that we happily share with others. aqua’s AI Copilot creates entire test cases from scratch, completes test drafts, and helps you prioritise the QA effort. Unlike plain ChatGPT, our solution uses the context of your software and its test suite to make highly personalised suggestions.


Cases of using ChatGPT for QA testing

ChatGPT can be used to generate test cases for various applications, reducing the time and effort required for manual test case creation. For QA purposes, ChatGPT is praised above all for its speed: a request to write a simple test case usually takes less than a minute to complete. But are there any other cases where ChatGPT is a viable choice for testers?

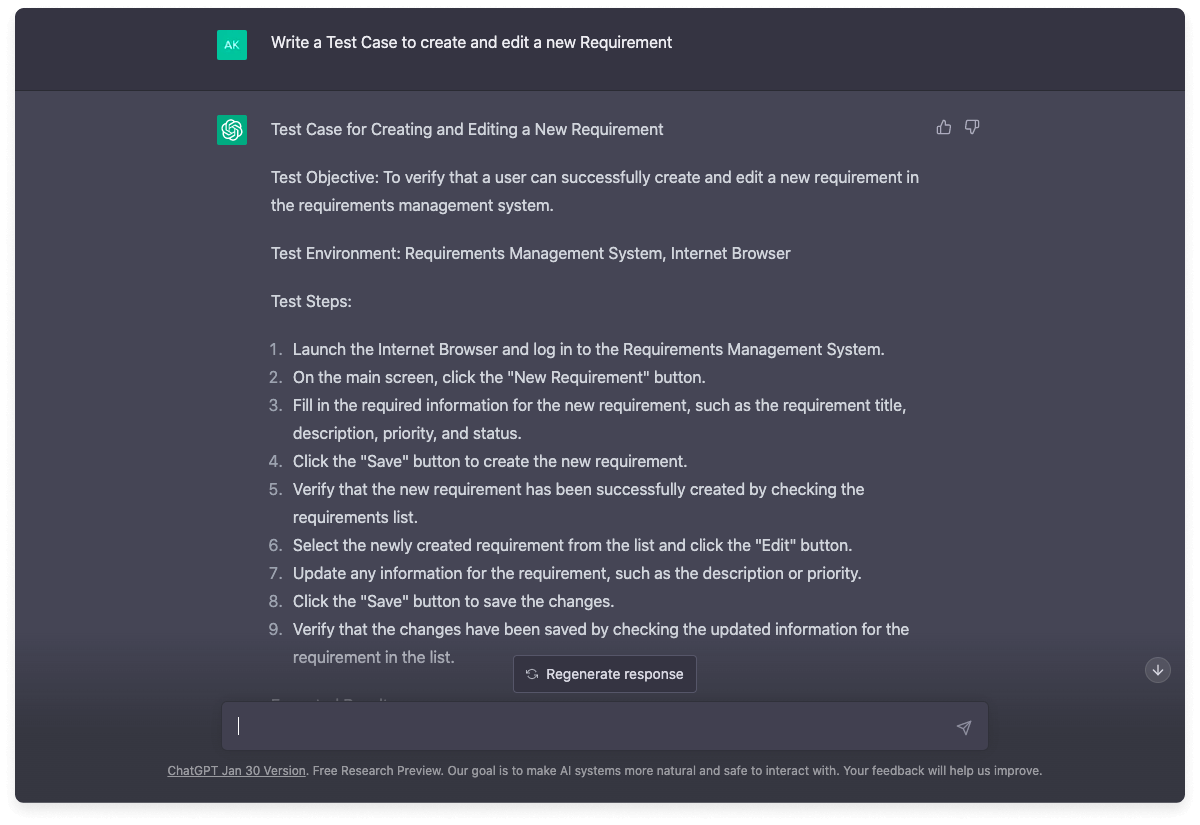

Write a Test Case to create and edit a new Requirement

Test Case for Creating and Editing a New Requirement

Test Objective: To verify that a user can successfully create and edit a new requirement in the requirements management system.

Test Environment: Requirements Management System, Internet Browser

Test Steps:

- Launch the Internet Browser and log in to the Requirements Management System.

- On the main screen, click the New Requirement button.

- Fill in the required information for the new requirement, such as the requirement title, description, priority, and status.

- Click the Save button to create the new requirement.

- Verify that the new requirement has been successfully created by checking the requirements list.

- Select the newly created requirement from the list and click the Edit button.

- Update any information for the requirement, such as the description or priority.

- Click the Save button to save the changes.

- Verify that the changes have been saved by checking the updated information for the requirement in the list.

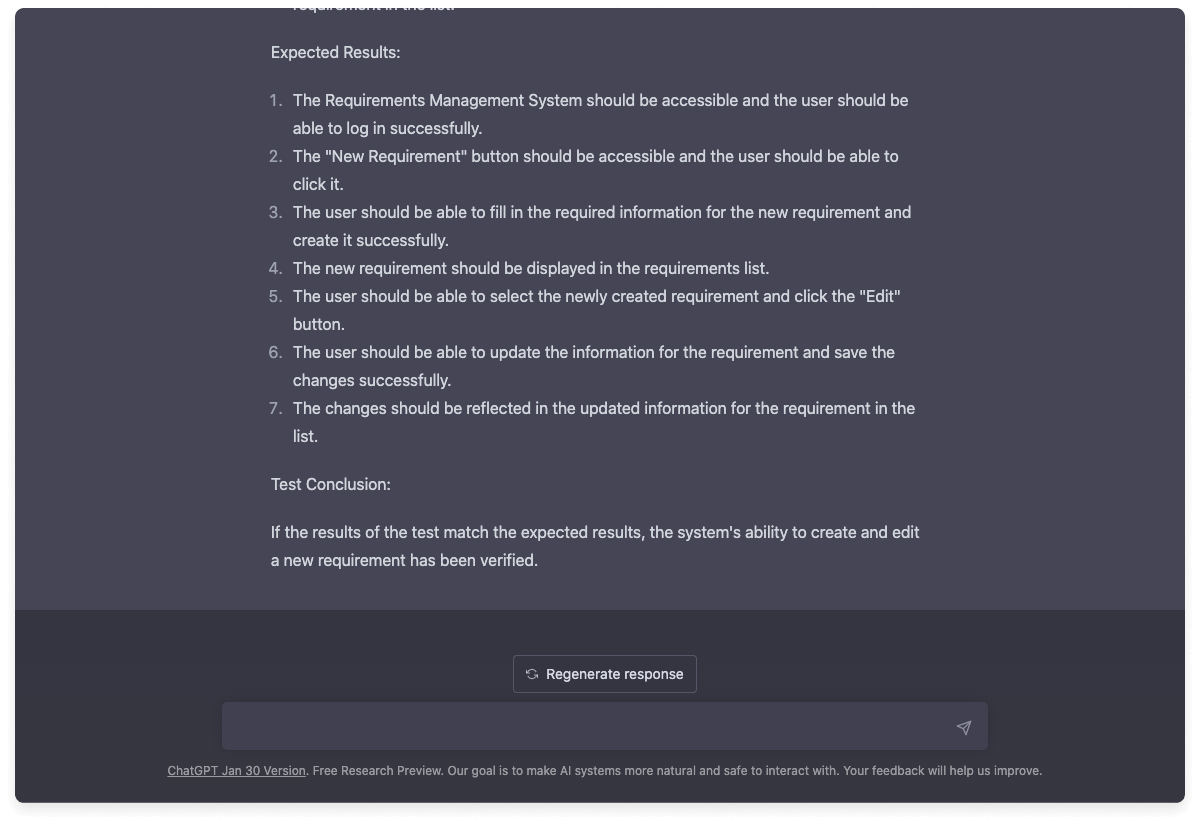

Expected Results:

- The Requirements Management System should be accessible and the user should be able to log in successfully.

- The New Requirement button should be accessible and the user should be able to click it.

- The user should be able to fill in the required information for the new requirement and create it successfully.

- The new requirement should be displayed in the requirements list.

- The user should be able to select the newly created requirement and click the Edit button.

- The user should be able to update the information for the requirement and save the changes.

- The changes should be reflected in the updated information for the requirement in the list.

Test Conclusion:

If the results of the test match the expected results, the system’s ability to create and edit a new requirement has been verified.

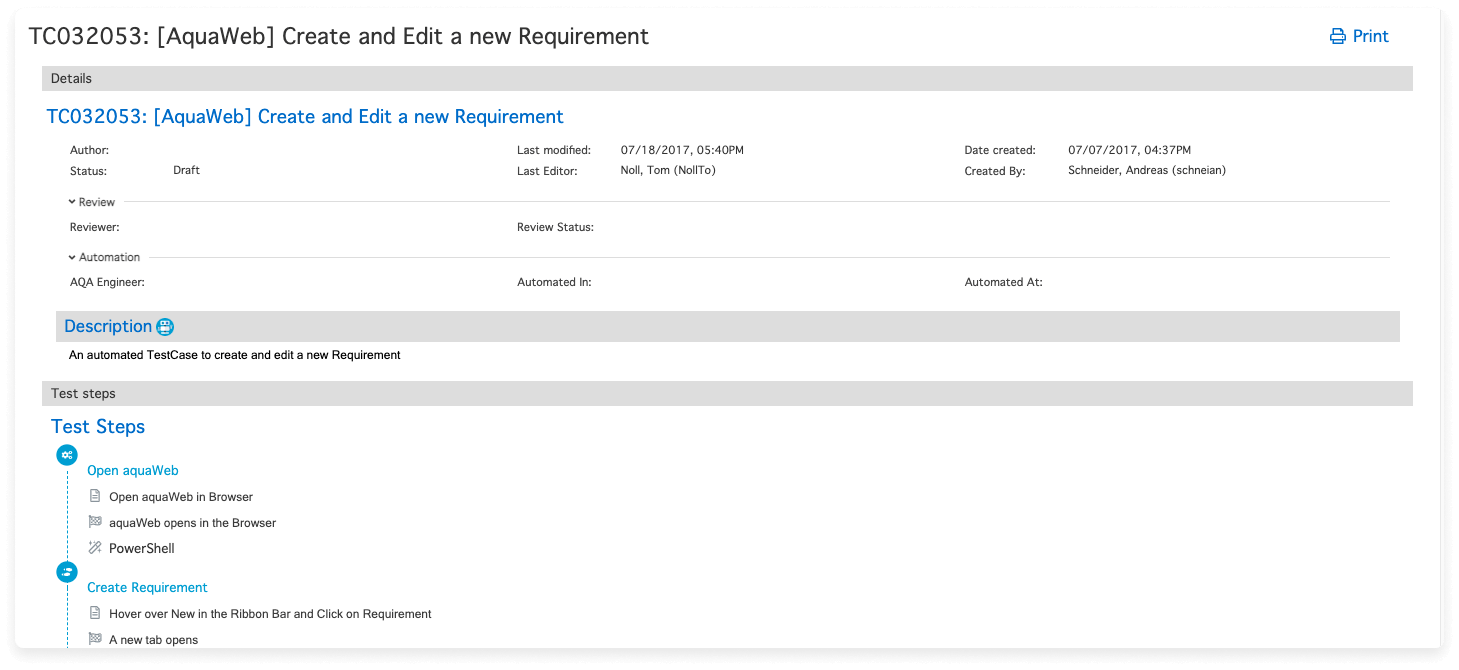

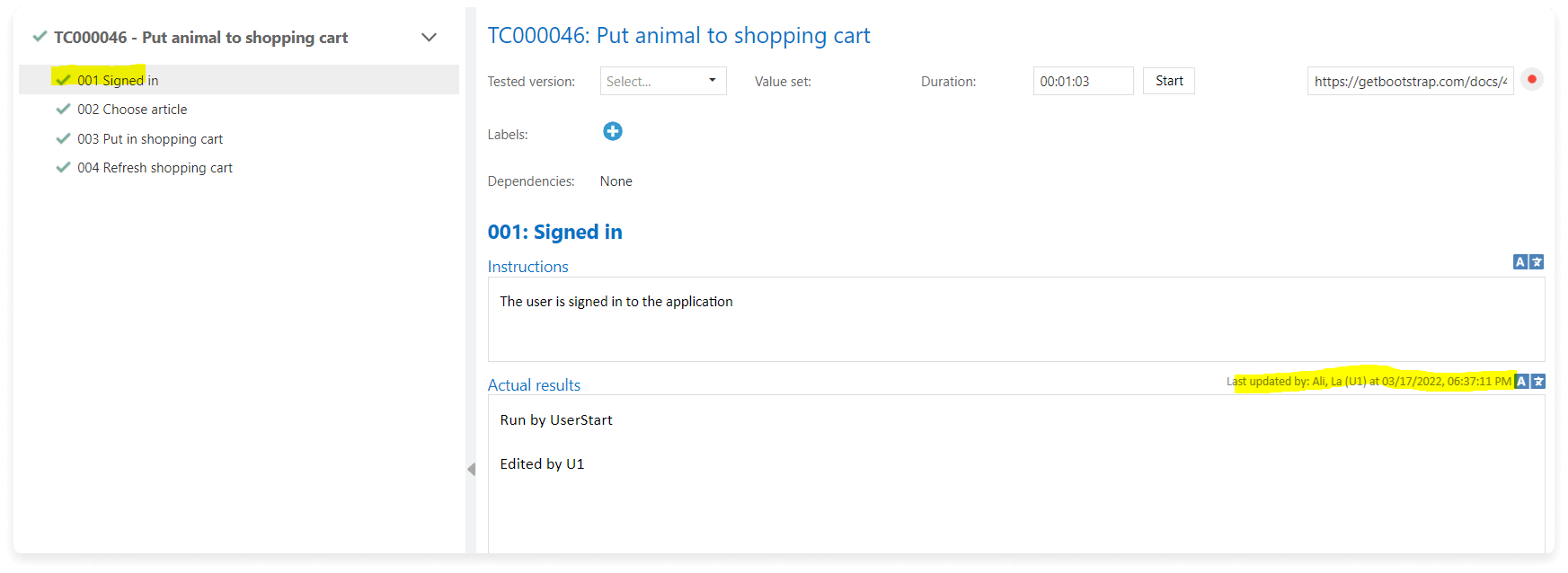

Here is the same test case created by our tester manually in aqua:

Open aquaWeb

- Open aquaWeb in Browser

- aquaWeb opens in the Browser

- PowerShell

Create Requirement

- Hover over New in the Ribbon Bar and Click on Requirement

- A new tab opens

Name

- Fill in the Name of the Requirement

- the Name fills

Save & Close

- Click on Save and Close in the Ribbon Bar

- You are redirected to the Navigation view

Check

- Refresh the view and search the requirement in the list

- The item appears in the list

Open Requirement

- Open the Requirement with a double Click

- The requirement opens in a new tab

Edit Requirement

- Change some values in the view

- The values can be selected and changed

Save & Close

- Click on Save & Close in the Ribbon Bar

Reopen the Requirement

- Reopen the Requirement and check that the changed values are the ones you changed them to

- The values are the same

Edit description

- Click in the description and type some text

- The text is displayed in the editor

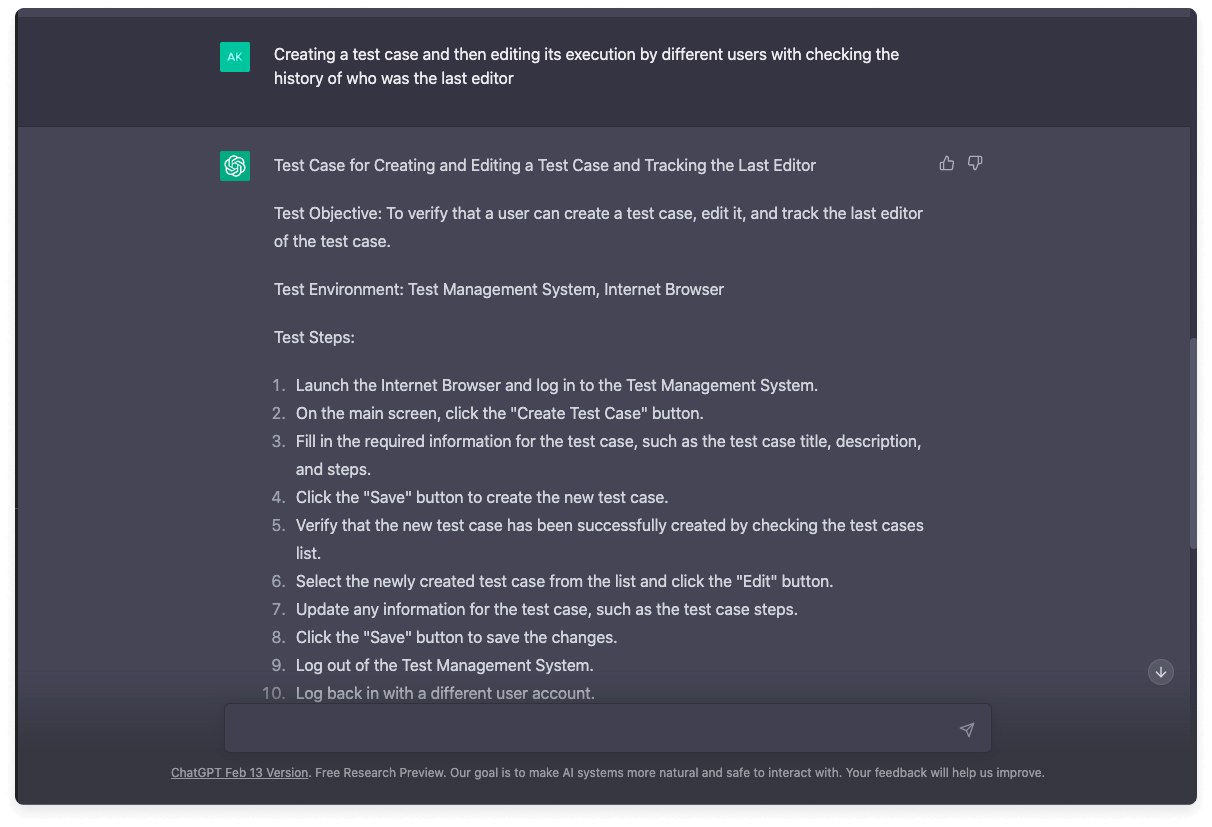

We tried another request to see how ChatGPT would cope with a more complicated test scenario:

Test Case for Creating and Editing a Test Case and Tracking the Last Editor

Test Objective: To verify that a user can create a test case, edit it, and track the last editor of the test case.

Test Environment: Test Management System, Internet Browser

Test Steps:

- Launch the Internet Browser and log in to the Test Management System.

- On the main screen, click the “Create Test Case” button.

- Fill in the required information for the test case, such as the test case title, description, and steps.

- Click the “Save” button to create the new test case.

- Verify that the new test case has been successfully created by checking the test cases list.

- Select the newly created test case from the list and click the “Edit” button.

- Update any information for the test case, such as the test case steps.

- Click the “Save” button to save the changes.

- Log out of the Test Management System.

- Log back in with a different user account.

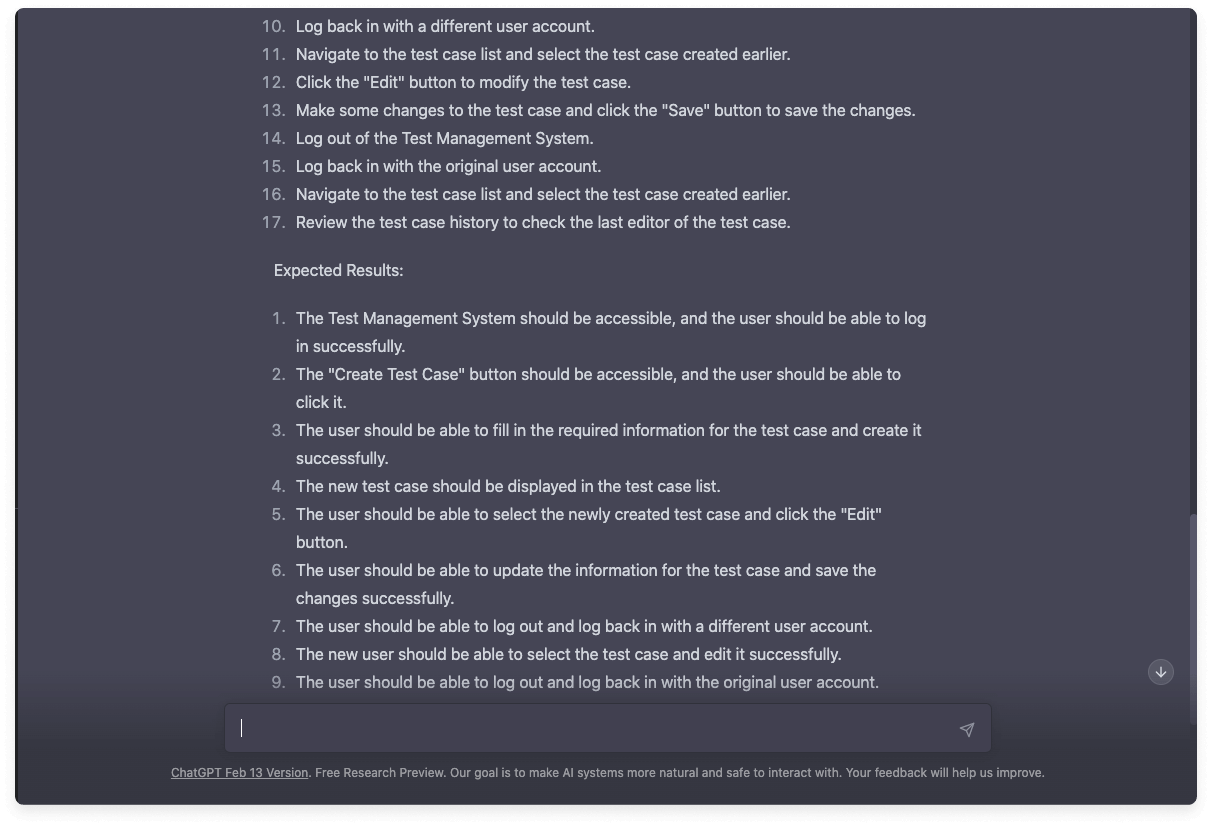

- Navigate to the test case list and select the test case created earlier.

- Click the “Edit” button to modify the test case.

- Make some changes to the test case and click the “Save” button to save the changes.

- Log out of the Test Management System.

- Log back in with the original user account.

- Navigate to the test case list and select the test case created earlier.

- Review the test case history to check the last editor of the test case.

Expected Results:

- The Test Management System should be accessible, and the user should be able to log in successfully.

- The “Create Test Case” button should be accessible, and the user should be able to click it.

- The user should be able to fill in the required information for the test case and create it successfully.

- The new test case should be displayed in the test case list.

- The user should be able to select the newly created test case and click the “Edit” button.

- The user should be able to update the information for the test case and save the changes successfully.

- The user should be able to log out and log back in with a different user account.

- The new user should be able to select the test case and edit it successfully.

- The user should be able to log out and log back in with the original user account.

- The original user should be able to review the test case history and see the last editor of the test case.

Test Conclusion:

If the results of the test match the expected results, the system’s ability to create and edit a test case and track the last editor has been verified.

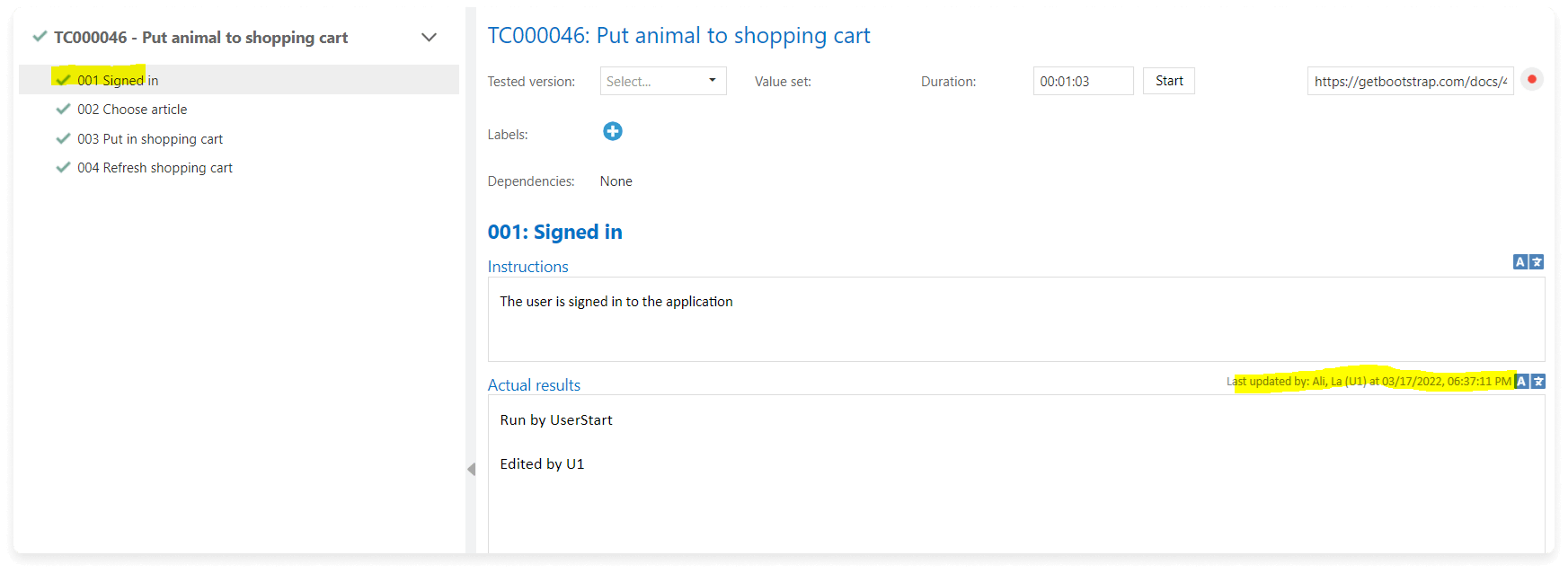

And here is the same test case as written in aqua:

Test Steps

- Precondition 1 – Existing Users

- Following users exist:

- UserA

- U1

- U2

- All users have access to a TestprojectX in aqua with the Project Administrator role

- Precondition 3 – Existing test execution

- A test case exists that was executed by UserA and for each step a text is provided in the field “Actual result”

Login of U1

- U1 provided its username and email

- Click on Login

- Login to U1 was successful.

- Edit by U1

- U1:

- Click on Navigation and Select the project mentioned in the precondition.

- Open the TestcaseY, click on “Executions”

- Select the execution performed by UserA and click on “Continue”.

- Edit the actual result of step 1

- Click Save and Close

- The test execution was updated.

- The changes were saved and closed.

Logout U1

- U1 logs out of aqua

- Logout from U1 was successful.

Login of U2

- U2 provided its username and email

- Click on Login

- Login to U2 was successful.

- Edit by U2

- U2:

- Click on Navigation and Select the project mentioned in the precondition.

- Open the TestcaseY, click on executions

- Select the execution performed by UserA and Click on Continue.

- Edit the actual result of step 2

- click save and close

- The test execution was updated.

- The changes were saved and closed.

Logout U2

- U2 logs out of aqua

- Logout of U2 was successful.

Login of UserA

- UserA provided their username and email

- Click on Login

- Login to UserA was successful.

- UserA: Check change history

- UserA:

- Open the TestcaseY mentioned in the precondition.

- Click on Executions.

- Open the last execution

- Check if a history entry is shown only for the edited steps

- It shows the entry only on the step that was edited, others are not affected and have no history entry.

Step1 was edited by U1:

Step2 was edited by U2:

"ChatGPT executed the task perfectly taking into account that input data was limited to a short request sent into a ChatGPT window. However, the lack of context makes this test case too general: it does not cover individual company requirements."

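The assessment above points at missing context as the main weakness of a one-line request. As a rough illustration, here is a minimal Python sketch of how a tester might enrich the request before pasting it into a ChatGPT window. The function name `build_test_case_prompt` and the wording of the prompt are our own assumptions for illustration; the UI terms are taken from the manual aqua test case earlier in this article. The sketch only builds the text and does not call any API.

```python
from typing import List


def build_test_case_prompt(task: str, product: str, ui_terms: List[str]) -> str:
    """Combine a short request with product-specific context (hypothetical helper)."""
    lines = [
        f"Write a test case to {task}.",
        f"The application under test is {product}.",
    ]
    if ui_terms:
        # Naming real UI elements nudges the model away from generic buttons.
        lines.append("Use these exact UI element names in the steps:")
        lines.extend(f"- {term}" for term in ui_terms)
    lines.append("Give each step together with its expected result.")
    return "\n".join(lines)


prompt = build_test_case_prompt(
    task="create and edit a new Requirement",
    product="aquaWeb",
    ui_terms=["Ribbon Bar", "Save & Close", "Navigation view"],
)
print(prompt)
```

Even with this extra context, the output would still need a review by someone who knows the product and the company's requirements.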

Testing Chatbots

ChatGPT can be used to test the accuracy and effectiveness of chatbots by generating test inputs and evaluating the outputs. This can help identify and fix issues with chatbots before they reach end-users.
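To make this more concrete, here is a small Python sketch of such a check. Everything in it is an assumption for illustration: `toy_chatbot` is a hypothetical stand-in for the chatbot under test, and the test inputs are the kind of paraphrases one might ask ChatGPT to draft and then review by hand. The harness simply reports inputs whose reply lacks an expected keyword.

```python
from typing import Callable, Dict, List


def toy_chatbot(message: str) -> str:
    """Hypothetical stand-in for the chatbot under test."""
    text = message.lower()
    if "refund" in text:
        return "You can request a refund within 30 days."
    if "hours" in text or "open" in text:
        return "We are open from 9:00 to 17:00."
    return "Sorry, I did not understand that."


def run_chatbot_checks(bot: Callable[[str], str], cases: List[Dict[str, str]]) -> List[str]:
    """Return the inputs whose reply does not contain the expected keyword."""
    failures = []
    for case in cases:
        reply = bot(case["input"])
        if case["expect"].lower() not in reply.lower():
            failures.append(case["input"])
    return failures


# Inputs like these could be drafted with ChatGPT and reviewed by a tester.
generated_cases = [
    {"input": "How do I get a refund?", "expect": "refund"},
    {"input": "When are you open?", "expect": "open"},
    {"input": "Can I have my money back?", "expect": "refund"},
]

failed_inputs = run_chatbot_checks(toy_chatbot, generated_cases)
print(failed_inputs)  # ['Can I have my money back?']
```

The third input fails because the toy bot only matches the literal word "refund", which is exactly the kind of gap that paraphrased inputs tend to expose.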

Improving Natural Language Processing (NLP) Models

Natural Language Processing (NLP) is a field of computer science that deals with enabling computer programs to understand and interpret human language, both written and spoken, in a manner that resembles human comprehension.

ChatGPT can be used to evaluate and improve NLP models by generating test inputs and evaluating the model’s ability to accurately process and understand natural language.

Sentiment Analysis Testing

Sentiment Analysis, also known as Opinion Mining, is a branch of Natural Language Processing (NLP) that focuses on determining the sentiment expressed in a given piece of text. It aims to categorise the sentiment expressed as positive, negative, or neutral. This technique is widely used by businesses to gauge customer sentiment towards their brand and products by analysing feedback, enabling them to gain valuable insights and better understand the needs of their customers.

ChatGPT can be used to generate test inputs for sentiment analysis models, helping to evaluate their accuracy and identify areas for improvement.
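As a sketch of what that evaluation could look like, the Python snippet below scores a model against labelled sentences. Note the assumptions: `toy_sentiment_model` is a deliberately naive keyword matcher standing in for a real model, and the labelled sentences are examples of what a tester might request from ChatGPT and verify manually.

```python
import re
from typing import Callable, List, Tuple

POSITIVE_WORDS = {"great", "love", "excellent"}
NEGATIVE_WORDS = {"bad", "hate", "terrible"}


def toy_sentiment_model(text: str) -> str:
    """Naive keyword-based classifier, a stand-in for the model under test."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    has_positive = bool(words & POSITIVE_WORDS)
    has_negative = bool(words & NEGATIVE_WORDS)
    if has_positive and not has_negative:
        return "positive"
    if has_negative and not has_positive:
        return "negative"
    return "neutral"


def evaluate(model: Callable[[str], str],
             labelled: List[Tuple[str, str]]) -> Tuple[float, List[str]]:
    """Return accuracy and the texts the model labelled incorrectly."""
    misclassified = [text for text, expected in labelled if model(text) != expected]
    accuracy = 1 - len(misclassified) / len(labelled)
    return accuracy, misclassified


# Labelled inputs of the kind ChatGPT could generate, reviewed by a human.
labelled_inputs = [
    ("I love this app, it is great!", "positive"),
    ("Terrible update, I hate the new layout.", "negative"),
    ("The package arrived on Tuesday.", "neutral"),
    ("Not bad at all, I am impressed.", "positive"),
]

accuracy, misclassified = evaluate(toy_sentiment_model, labelled_inputs)
print(accuracy)       # 0.75
print(misclassified)  # ['Not bad at all, I am impressed.']
```

The negated sentence is the one that trips the toy model, which shows why generated inputs should deliberately include negation, sarcasm, and mixed opinions.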

Testing Voice Assistant Applications

To improve the accuracy of a voice assistant model, companies need a vast amount of recorded speech samples to train the voice recognition system, making it more accurate and natural for users. This raises another problem: how do you test it when there is so much data? ChatGPT can partially help by generating test inputs for voice assistant applications or by evaluating their ability to recognise and process spoken commands accurately.

These are just a few examples of voice assistant and IoT applications that can all be tested and enhanced with extra test data:

- Virtual Personal Assistants (Google Assistant, Siri)

- Smart Home Devices (smart speakers, lights control, thermostats)

- Automotive Infotainment Systems

- Healthcare Devices (wearable fitness trackers, smart scales, glucose monitors)

- TV and Media Devices

However, this technology is continually evolving and being integrated into new devices and products. To perfect their models, developers outsource data collection to a global community of users who are paid for their work. But this approach costs both time and money, while ChatGPT can provide sample data faster and with less effort. This also boosts (inherently flawed) security through obscurity and avoids the ethical concerns of using underpaid labour from developing countries to train the AI models used in voice assistants.
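To give a feel for how a handful of phrasings can turn into a larger test set, here is a minimal Python sketch. It is not how ChatGPT works internally: it is plain template expansion, a much simpler technique that we swap in for illustration. The word lists are hypothetical and stand for phrasing variants that ChatGPT could suggest. The output is text only, so it covers the transcript side of a voice command, not the audio.

```python
from itertools import product
from typing import List


def expand_commands(openers: List[str], actions: List[str], targets: List[str]) -> List[str]:
    """Build every opener/action/target combination, skipping empty parts."""
    return [
        " ".join(part for part in combo if part)
        for combo in product(openers, actions, targets)
    ]


# Hypothetical phrasing variants; an empty opener means the command starts directly.
openers = ["", "please", "hey assistant"]
actions = ["turn on", "switch on"]
targets = ["the lights", "the thermostat"]

utterances = expand_commands(openers, actions, targets)
print(len(utterances))  # 12
print(utterances[0])    # turn on the lights
```

Three short lists already give twelve distinct commands, and each added variant multiplies the total.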

Our team has prepared an overview of AI testing trends to cover what is possible with ChatGPT and beyond. The ebook also includes a comparison of test management solutions with AI functionality to help you put insights to good use.


Future of testing with AI

The future of testing with AI holds a lot of potential for improving the efficiency and accuracy of the testing process. With AI’s ability to analyse large amounts of data, automate repetitive tasks, and make predictions, it has the potential to revolutionise the way software is tested. Here are a few ways AI is expected to impact testing in the future:

- Predictive Testing: AI algorithms will be able to analyse software code and predict where bugs are likely to occur, allowing testers to focus their efforts more effectively.

- Automated Testing: AI can automate many of the manual tasks associated with testing, such as test case execution, regression testing, and bug triage. This will free up testers to focus on more complex and creative tasks. Using ChatGPT for test automation today does not reflect this potential: GPT solutions built specifically for QA will be much better.

- Continuous Testing: AI can monitor software applications in real-time and perform tests automatically, enabling teams to catch bugs and issues sooner and reduce the time required to test changes.

- Improved Test Coverage: AI can analyse software to identify under-tested areas and recommend tests that should be added to improve coverage.

The use of AI in testing is expected to greatly improve the efficiency and accuracy of the testing process, allowing teams to focus on more complex tasks and deliver high-quality software faster.
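To illustrate the idea behind the coverage and prediction points above, here is a deliberately simple Python sketch. It is a hand-written heuristic, not an AI model: it flags modules below a coverage threshold and ranks them by how often they changed recently. The module names, numbers, field names, and the 0.8 threshold are all made-up assumptions.

```python
from typing import Dict, List


def rank_under_tested(modules: Dict[str, Dict[str, float]],
                      min_coverage: float = 0.8) -> List[str]:
    """Return module names below the coverage threshold, riskiest first."""
    under_tested = [
        name for name, stats in modules.items()
        if stats["coverage"] < min_coverage
    ]

    def risk(name: str) -> float:
        stats = modules[name]
        # More recent changes and lower coverage both raise the score.
        return stats["recent_changes"] * (1 - stats["coverage"])

    return sorted(under_tested, key=risk, reverse=True)


# Made-up figures for illustration only.
modules = {
    "login": {"coverage": 0.92, "recent_changes": 3},
    "requirements": {"coverage": 0.55, "recent_changes": 12},
    "reports": {"coverage": 0.40, "recent_changes": 2},
    "executions": {"coverage": 0.70, "recent_changes": 8},
}

priority = rank_under_tested(modules)
print(priority)  # ['requirements', 'executions', 'reports']
```

An AI-based tool would presumably learn such a ranking from code and defect history rather than from a fixed formula, but the output a tester acts on looks much the same: an ordered list of where to test next.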

Conclusion

Slide rules were used by engineers and other mathematically involved professionals for centuries before the invention of the pocket calculator. However, the introduction of calculators did not make these professionals obsolete. Instead, it made their work faster, more accurate, and more efficient. Similarly, AI tools can assist QA engineers in performing their tasks more effectively and efficiently, without necessarily replacing them.

AI tools bring the benefit of automating repetitive and time-consuming testing tasks, such as regression testing. QA engineers get to focus on higher-level testing activities that require human creativity, intuition, and problem-solving skills. These tools can help identify patterns, analyse data, and generate insights that can be challenging to detect manually. They also reduce the risk of human error, which can be especially important in safety-critical applications.

Summing up, you shouldn’t hope to replace humans with AI testing, but you can’t dismiss it either. QA engineers must be open to embracing these tools and adapting their skill sets to take advantage of them. By leveraging AI tools, QA engineers can improve their work, provide more value to their organisations, and ultimately enhance the quality of the software they deliver.
