Start testing early

You don’t need to wait until everything’s built to spot performance issues. Testing components and APIs right from day one can help you catch roughly 80% of bottlenecks before they snowball into bigger headaches. You also need to set up automated performance checks in your CI/CD pipeline that scream when response times jump more than 20%. Your developers will thank you later when they’re not scrambling to fix massive slowdowns at 2 AM before launch.
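As a rough illustration of such a CI check, here is a minimal sketch that compares p95 response times per endpoint against a stored baseline and fails when any of them grows by more than 20%. The endpoint names, the numbers and the `find_regressions`/`gate_exit_code` helpers are hypothetical. How you store the baseline between pipeline runs depends on your CI system.

```python
REGRESSION_LIMIT = 0.20  # flag any endpoint whose p95 grows by more than 20%


def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     limit: float = REGRESSION_LIMIT) -> list[str]:
    """Compare p95 response times (ms) per endpoint against a stored baseline."""
    problems = []
    for endpoint, base_ms in baseline.items():
        now_ms = current.get(endpoint)
        if now_ms is None:
            problems.append(f"{endpoint}: missing from current run")
            continue
        change = (now_ms - base_ms) / base_ms
        if change > limit:
            problems.append(f"{endpoint}: p95 {base_ms:.0f} ms -> {now_ms:.0f} ms (+{change:.0%})")
    return problems


def gate_exit_code(baseline: dict[str, float], current: dict[str, float]) -> int:
    """Print every regression and return a CI-friendly exit code (1 = fail)."""
    problems = find_regressions(baseline, current)
    for message in problems:
        print("PERF REGRESSION:", message)
    return 1 if problems else 0


# Hypothetical numbers: checkout slowed from 420 ms to 560 ms (+33%).
baseline = {"GET /products": 180.0, "POST /checkout": 420.0}
current = {"GET /products": 190.0, "POST /checkout": 560.0}
exit_code = gate_exit_code(baseline, current)
```

In a real pipeline step you would end the script with `sys.exit(gate_exit_code(baseline, current))`, since most CI systems mark a step as failed when it exits with a non-zero code.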

Start stupidly small – even a basic load test on your main API endpoint beats discovering your app crashes under real traffic.
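To show how small that first test can be, here is a sketch of a basic load test that uses only the Python standard library. It sends a fixed number of GET requests through a pool of concurrent workers and reports the error rate and the nearest-rank 95th percentile. The helper names and default values are assumptions made for this example. A dedicated tool such as JMeter will give you far more control over ramp-up, reporting and distributed load.

```python
import http.client
import math
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def timed_get(url: str, timeout: float = 5.0) -> tuple[float, bool]:
    """Send one GET request; return (elapsed seconds, whether it succeeded)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()  # include the body download in the measured time
            ok = 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):
        # Connection errors, timeouts and HTTP 4xx/5xx (HTTPError) all count as failures.
        ok = False
    return time.perf_counter() - start, ok


def basic_load_test(url: str, total_requests: int = 200, concurrency: int = 10) -> dict:
    """Send total_requests GETs using a fixed pool of concurrent workers."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: timed_get(url), range(total_requests)))
    durations = sorted(elapsed for elapsed, _ in results)
    errors = sum(1 for _, ok in results if not ok)
    p95_rank = max(1, math.ceil(95 * len(durations) / 100))  # nearest-rank p95
    return {
        "requests": total_requests,
        "error_rate": errors / total_requests,
        "p95_ms": durations[p95_rank - 1] * 1000,
        "max_ms": durations[-1] * 1000,
    }
```

Usage would look like `print(basic_load_test("http://localhost:8000/api/products"))`, where the URL is a placeholder for your own main endpoint. Only point it at environments you own.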

The consequences of late testing are exacerbated if you are working on a live service product. Whether it is a marketplace or a booming video game, you usually won’t have the resources to add features and rewrite a flawed solution at the same time. It is hard to achieve both even if you are prioritising growth over profits at the moment.

Here’s what a former Twitter software engineer had to say about the performance of the company’s Android app:

‘I think there are three reasons the app is slow. First, it’s bloated with features that get little usage. Second, we have accumulated years of tech debt as we have traded velocity and features over performance. Third, we spend a lot of time waiting for network responses.

Frankly, we should probably prioritise some big rewrites to combat 10+ years of tech debt and make a call on deleting features aggressively.’

If this Twitter saga has taught us anything, it’s this: don’t leave testing to the last minute, and you will avoid decade-long performance bottlenecks and all the drama that comes with them.

Other key practices of performance testing were mentioned in the video.

Pick your metrics

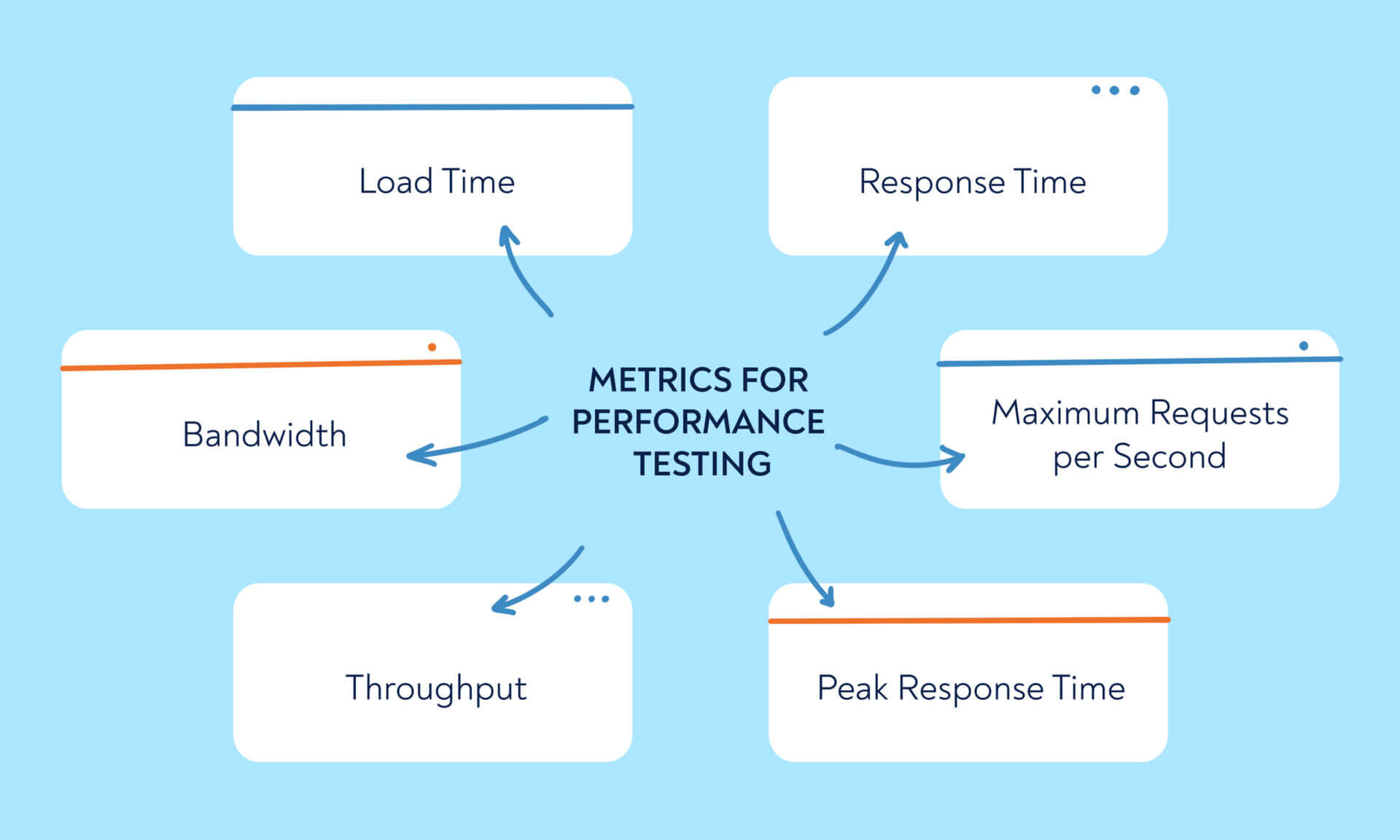

On the surface, it may be easy to tell whether your app works well or not: if it loads things fast and doesn’t crash, it’s good to go. A software performance testing strategy, however, needs more nuance and detail, especially when you are testing SaaS applications.

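You’ll want to track the metrics that actually matter: load times, response time percentiles (90th, 95th, 99th), plus user-focused ones like Apdex (Application Performance Index), FCP (First Contentful Paint), LCP (Largest Contentful Paint), and TTI (Time to Interactive). These directly measure real user experience.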
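Apdex condenses response times into a single score between 0 and 1 using a target threshold T: requests at or under T count as satisfied, requests up to 4T count as tolerating (half weight), and anything slower counts as frustrated. A minimal sketch follows. The 0.5-second threshold and the sample data are assumptions, and this version only looks at response times (the Apdex specification also treats failed requests as frustrated).

```python
def apdex(response_times_s: list[float], threshold_s: float) -> float:
    """Apdex score in [0, 1] for a target threshold T (seconds).

    Satisfied: t <= T, tolerating: T < t <= 4T, frustrated: t > 4T.
    """
    if not response_times_s:
        raise ValueError("need at least one sample")
    satisfied = sum(1 for t in response_times_s if t <= threshold_s)
    tolerating = sum(1 for t in response_times_s if threshold_s < t <= 4 * threshold_s)
    return (satisfied + tolerating / 2) / len(response_times_s)


# Hypothetical response times in seconds, scored against T = 0.5 s.
samples = [0.2, 0.3, 0.4, 0.6, 0.9, 1.5, 2.5, 0.35, 0.45, 0.25]
print(round(apdex(samples, threshold_s=0.5), 2))  # 0.75: 6 satisfied, 3 tolerating, 1 frustrated
```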

Don’t ignore the system side either. CPU usage, memory consumption, and error rates pinpoint exactly where things break down. Those response time percentiles reveal the worst user experiences hiding in your data.

Start with your 95th percentile response times: if they’re over 2 seconds, at least 1 in 20 requests is slow enough to frustrate users. LCP is also one of Google’s Core Web Vitals, which feed into search rankings, so you’re not just optimising for users anymore.

Track throughput alongside these quality metrics. It’s not enough to handle volume if your app crawls doing it.

Stability metrics are important to include even if you are not expecting a massive number of visitors. Maximum Requests per Second, Peak Response Time, Throughput, and Bandwidth are all important indicators. Uptime, however, is arguably the most important metric of all, even if you’re running an online flower shop with 10 daily visitors.
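Two of these stability numbers are easy to compute yourself. The sketch below converts an uptime target into a monthly downtime budget and derives peak requests per second from request start timestamps, such as those you might pull from access logs. The 30-day month, the whole-second bucketing and the sample timestamps are assumptions made for illustration.

```python
from collections import Counter


def allowed_downtime_minutes(uptime_target: float, days: int = 30) -> float:
    """Minutes of downtime an uptime target allows over a period of `days`."""
    return (1 - uptime_target) * days * 24 * 60


def peak_requests_per_second(timestamps_s: list[float]) -> int:
    """Largest number of requests that started within the same whole second.

    Assumes non-negative timestamps in seconds, e.g. Unix time from access logs.
    """
    per_second = Counter(int(t) for t in timestamps_s)
    return max(per_second.values(), default=0)


print(f"{allowed_downtime_minutes(0.999):.1f} min/month at 99.9%")    # 43.2
print(f"{allowed_downtime_minutes(0.9999):.2f} min/month at 99.99%")  # 4.32
print(peak_requests_per_second([0.1, 0.4, 0.9, 1.2, 1.3, 2.7]))       # 3
```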

Build a testing software suite

It’s not just different metrics that you will have to juggle. There are six primary types of performance testing (load, stress, spike, endurance, volume, and scalability testing), and you may need more than one tool to cover them all. JMeter is an amazing tool for load testing, but ReadyAPI will be just as important if you are building an API performance testing strategy.

Another important consideration is aligning performance testing with the rest of your QA software stack. If your company uses Selenium for test automation, you might as well automate performance testing with a Selenium-based solution. The same goes for a low-code/no-code solution, if you are using one.

You will also greatly benefit from a single solution to orchestrate all these different tests. Our advice is to look at your existing QA infrastructure, pick an enterprise performance testing tool, and find an integration-friendly test management solution to govern all the tools.

To maximise the power of performance testing, you need a solution that does more than just run tests: it should elevate your entire testing strategy. What if we told you there is a Test Management Solution (TMS) that does it all with pitch-perfect German quality and 20 years of experience in the market?

We are talking about aqua cloud. Going above and beyond performance testing tools like JMeter or ReadyAPI, aqua cloud centralises all your test cases, making your testing process more efficient and seamless across different types of tests. With customisable KPI alerts, you’ll always know when a performance benchmark is missed, and detailed reports give you clear insights that speed up decision-making. aqua cloud isn’t just for performance testing; it’s here to boost your entire QA process, helping you manage functional, security, and compatibility tests all in one place. With aqua, you get 100% traceability, automated workflows, and full integration with your CI/CD pipeline.


Embrace Automation and AI in Performance Testing

Automation and AI have shifted from ‘nice-to-have’ features to absolute must-haves in performance testing. When you plug automated performance tests directly into your CI/CD pipeline, every code change gets checked against your benchmarks automatically – no more surprises in production.

But here’s where it gets interesting – AI takes this further by spotting patterns you’d miss. It analyses your test history, flags potential bottlenecks before they blow up, and suggests specific areas that need attention based on what has broken before. AI-powered testing can catch nearly 40% more performance issues during development.
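AI-driven tools rely on their own models, which this article does not describe. To illustrate the basic idea of judging a new result against test history, here is a much simpler, non-AI stand-in: a z-score check that flags a run whose p95 sits far above the mean of previous runs. The 3-sigma limit and the sample history are assumptions, and real tools account for trends and seasonality that this sketch ignores.

```python
from statistics import mean, stdev


def flag_anomaly(history_ms: list[float], latest_ms: float, z_limit: float = 3.0) -> bool:
    """True when latest_ms is more than z_limit standard deviations above the history mean."""
    if len(history_ms) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history_ms)
    sigma = stdev(history_ms)
    if sigma == 0:
        return latest_ms > mu  # flat history: any increase stands out
    return (latest_ms - mu) / sigma > z_limit


# Hypothetical p95 values (ms) from the last six builds of one endpoint.
history = [210, 205, 215, 208, 212, 207]
print(flag_anomaly(history, 260))  # True: far outside the usual spread
print(flag_anomaly(history, 214))  # False: normal run-to-run noise
```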

Start simple: pick one critical user flow and automate its performance test first. Tools like aqua cloud now handle the heavy lifting – centralising your data, sending smart alerts, and translating complex metrics into actionable insights your whole team can understand. This way, your QA process becomes predictive rather than reactive, and you spend time fixing real problems instead of hunting for them.

Organise your tests

It gets confusing to manage tests from multiple tools, but even one solution can get messy real fast. You need to establish good naming conventions, define the structure for test cases, and make sure that your team sticks to it.
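A convention sticks more easily when it is checked automatically. The sketch below validates test names against a regular expression. The `PERF_<component>_<test type>_<description>` pattern is a hypothetical convention made up for this example, so swap in whatever your team agrees on.

```python
import re

# Hypothetical convention: PERF_<component>_<test type>_<description>, lowercase snake_case.
NAME_PATTERN = re.compile(
    r"PERF_[a-z0-9]+_(load|stress|spike|endurance|volume|scalability)_[a-z0-9_]+"
)


def invalid_test_names(names: list[str]) -> list[str]:
    """Return the test names that do not follow the agreed convention."""
    return [name for name in names if not NAME_PATTERN.fullmatch(name)]


print(invalid_test_names([
    "PERF_checkout_load_black_friday",
    "PERF_search_spike_campaign_launch",
    "checkout test v2 FINAL",
]))  # ['checkout test v2 FINAL']
```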

A good structure extends beyond test cases. You can organise them into test scenarios, establish dependencies, and improve your bug reporting culture. Making sure that all functional requirements are covered by performance tests is a natural goal that you should still keep track of.
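Keeping track of that coverage boils down to a set difference between all requirements and the requirements your tests are linked to. Here is a minimal sketch. The requirement IDs, test names and link structure are hypothetical, and a test management tool would normally maintain these links for you.

```python
def uncovered_requirements(requirements: set[str],
                           test_links: dict[str, set[str]]) -> set[str]:
    """Requirements that no performance test is linked to."""
    covered = set().union(*test_links.values())
    return requirements - covered


# Hypothetical requirement IDs and test-to-requirement links.
requirements = {"REQ-101", "REQ-102", "REQ-103", "REQ-104"}
test_links = {
    "PERF_search_load_peak_hour": {"REQ-101"},
    "PERF_checkout_stress_flash_sale": {"REQ-102", "REQ-103"},
}
print(sorted(uncovered_requirements(requirements, test_links)))  # ['REQ-104']
```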

Establishing a good routine is just one half of the equation: you need to follow it as well. You can start by regularly bringing up any protocol-related issues in retrospective meetings. Using test management solutions with workflow functionality is a great way to ease the transition and future onboarding.

Ask your users

The flip side of good metrics is that performance testing can become too numbers-driven. If you look at nothing but milliseconds, it is easy to forget their real impact on the end user. It is great that your users can quickly pick the size of the shoes they are about to order, but they most likely filtered by size in the first place, so shaving time off that step barely changes their experience. When working with limited resources, it may be better to direct the performance testing effort and developers’ optimisation time elsewhere.

You may also consider studying heatmaps and/or full session recordings of both users who brought you new business and users who left without a purchase. Looking at the buying process in a busy season, you will probably find that users who make it to checkout are likely to complete their order. Given limited QA resources and server capacity, it is better to make sure that choosing products stays smooth even at peak load.
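One quick way to see where users drop off is to compute step-to-step conversion from session counts in your analytics. The sketch below does that for a hypothetical shop funnel. The step names and counts are invented to mirror the scenario above, where the biggest loss happens while choosing products rather than at checkout.

```python
def step_conversion(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of sessions that reach each step from the previous one."""
    return [(name, count / prev if prev else 0.0)
            for (_, prev), (name, count) in zip(funnel, funnel[1:])]


# Hypothetical session counts for a busy day.
funnel = [("landing", 10_000), ("product list", 7_000), ("product page", 3_500),
          ("checkout", 1_200), ("order placed", 1_080)]
rates = step_conversion(funnel)
for name, rate in rates:
    print(f"{name:>13}: {rate:.0%} of previous step")
weakest = min(rates, key=lambda step: step[1])
print("largest drop-off before:", weakest[0])  # checkout (34% of product-page sessions)
```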

Avoiding Common Performance Testing Pitfalls

You can’t build a solid testing strategy without knowing where things typically go sideways. Here’s what trips up most teams and how you can sidestep these traps.

First up: don’t get fooled by average response times. They’re sneaky little liars that hide the awful experiences some users actually face. Always check your 95th percentile response times alongside averages. If your average is 2 seconds but your 95th percentile hits 8 seconds, you’ve got a problem affecting real people.
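To see how an average hides the slow tail, here is a small worked example with a nearest-rank percentile helper. The sample data is hypothetical: 18 requests at 1.5 seconds and 2 at 8 seconds give an average only slightly above 2 seconds, while the 95th percentile lands on the 8-second requests.

```python
import math
from statistics import mean


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical response times in seconds: 18 fast requests and 2 very slow ones.
times = [1.5] * 18 + [8.0, 8.0]
print(f"average: {mean(times):.2f} s")         # 2.15 s, looks almost fine
print(f"p95:     {percentile(times, 95)} s")   # 8.0 s, the real problem
```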

Testing in fantasy environments is another classic mistake. Your laptop setup won’t tell you much about production reality. Quick win here: replicate your actual network conditions and server specs as closely as possible, even if it means scaling down proportionally.

Fuzzy performance requirements will leave your team guessing what ‘fast enough’ actually means. Pin down specific thresholds before you start testing.
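One way to pin thresholds down is to write them as data that every test run is checked against. In the sketch below, the metric names, the limits (800 ms p95, 1% errors, 150 requests per second) and the result format are hypothetical placeholders for whatever your team agrees on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    metric: str
    limit: float
    higher_is_better: bool = False  # e.g. throughput must stay above its limit


# Hypothetical targets for a checkout API; agree on yours before testing starts.
REQUIREMENTS = (
    Requirement("p95_ms", 800),
    Requirement("error_rate", 0.01),
    Requirement("throughput_rps", 150, higher_is_better=True),
)


def failed_requirements(results: dict[str, float],
                        reqs: tuple[Requirement, ...] = REQUIREMENTS) -> list[str]:
    """List every requirement the measured results miss (or did not measure)."""
    failures = []
    for req in reqs:
        value = results.get(req.metric)
        if value is None:
            failures.append(f"{req.metric}: not measured")
        elif req.higher_is_better and value < req.limit:
            failures.append(f"{req.metric}: {value} < {req.limit}")
        elif not req.higher_is_better and value > req.limit:
            failures.append(f"{req.metric}: {value} > {req.limit}")
    return failures


print(failed_requirements({"p95_ms": 950, "error_rate": 0.004, "throughput_rps": 170}))
# ['p95_ms: 950 > 800']
```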

Time matters more than you’d expect. Real users pause, read, and scroll around a bit. If you skip these natural delays in your test scripts, you’ll accidentally hammer your system with unrealistic load patterns.
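The effect of think time can be estimated with the interactive response time law from queueing theory, X = N / (R + Z): throughput X for N virtual users, response time R and think time Z. The sketch below applies it, assuming response time stays roughly constant (which stops being true once the system saturates). The 0.5-second response time and 9.5-second think time are illustrative values.

```python
import math


def closed_loop_throughput(users: int, response_s: float, think_s: float) -> float:
    """Requests per second generated by `users` virtual users in a closed loop (X = N / (R + Z))."""
    return users / (response_s + think_s)


def users_needed(target_rps: float, response_s: float, think_s: float) -> int:
    """Virtual users required to reach target_rps when each user pauses for think_s."""
    return math.ceil(target_rps * (response_s + think_s))


print(closed_loop_throughput(100, 0.5, 0.0))  # 200.0 req/s with no think time
print(closed_loop_throughput(100, 0.5, 9.5))  # 10.0 req/s with 9.5 s of think time
print(users_needed(50, 0.5, 9.5))             # 500 users for a realistic 50 req/s
```

The same 100 scripted users produce 20 times more load without pauses, which is exactly the unrealistic hammering described above.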

One last thing: always re-test your fixes. Nearly half of performance ‘solutions’ don’t actually solve the root issue, and you won’t know unless you verify that the improvements stick.

Final thoughts

Our list of best practices for creating a performance testing strategy ended up covering more than the actual testing. After all, you need to establish good processes and keep the end user in mind no matter which type of testing you run. Adjust these tips to your team and make top performance your trademark.

And to get the most out of your performance testing, you need a solution that can centralise, streamline, and optimise every part of your testing process, a solution that takes the pain out of testing. That solution is right at your fingertips: aqua cloud.

Here’s why you need aqua cloud in your toolkit:

- Outstanding AI-powered features to speed up testing and reduce manual work.

- Seamless integration with your automation and project management frameworks, including Jira, Jenkins, Ranorex, UnixShell, and Azure DevOps.

- Custom KPI alerts to stay on top of performance issues.

- Clear, customisable reports for fast decision-making.

- 100% traceability to control every step of your QA process.

Go beyond performance testing: transform 100% of your testing efforts with aqua cloud.