Key Takeaways

- Requirements analysis transforms vague stakeholder needs into clear, testable, and traceable system specifications that prevent miscommunication and rework.

- The process distinguishes between functional requirements (what the system does) and non-functional requirements (how well it performs) to ensure complete coverage.

- Poor requirements management directly leads to project failures, with PMI and CHAOS reports consistently citing unclear requirements as a top failure factor.

- Event Storming, Example Mapping, and BDD techniques create lightweight, collaborative ways to continuously discover and refine requirements.

- Requirements traceability links specifications to design, code, tests, and releases, enabling impact analysis and preventing scope creep.

Requirements analysis turns vague requests into testable, traceable specifications. Here’s how to run this critical process without creating unnecessary blockers👇

What is Requirement Analysis?

Requirement analysis is the process of turning stakeholder needs into clear, testable, and traceable system requirements. These needs come from users, product managers, compliance teams, or your own experience. In software engineering, requirement analysis translates vague requests into precise, verifiable specifications:

| Vague Stakeholder Request | Clear, Testable Requirement |
|---|---|
| “We need a faster login” | Authentication must complete within 1.5 seconds at the 95th percentile for 10,000 concurrent users |
| “The app should be secure” | All API endpoints must implement OAuth 2.0 authentication and rate limiting of 100 requests per minute per user |
| “Make the dashboard user-friendly” | Users must be able to access their top 3 metrics within 2 clicks from the homepage, with no training required |
| “We need better search” | Search results must return within 500ms for queries up to 50 characters, ranked by relevance score, displaying top 20 results |
| “The system should scale” | Infrastructure must handle 50,000 concurrent users with <2% error rate and auto-scale between 5-50 instances based on CPU threshold of 70% |

Standards like ISO/IEC/IEEE 29148 formalize this approach. In that view, requirements analysis has defined inputs (stakeholder needs, constraints, and context), defined outputs (specified requirements and traceability links), and a lifecycle process that keeps requirements honest from kickoff through deployment.

Requirement analysis covers two main types of requirements:

- Functional requirements. They describe what the system does. These are the features, behaviors, and interactions users care about. For example: “Users can reset their password via email link” or “The system shall export reports in CSV and PDF formats.” These requirements answer “what happens when?” and directly shape your user stories, acceptance tests, and feature scope.

- Non-functional requirements (NFRs). NFRs specify how well the system performs those functions. Think performance, security, reliability, accessibility, and scalability. An NFR might read:

  - “API response time ≤ 300 ms under 2,000 requests per second”

  - “The app must meet WCAG 2.1 AA standards”

NFRs are just as testable as functional requirements. They simply need measurable fit criteria and explicit verification methods, such as load tests or accessibility audits. Both types shape your design, your test strategy, and your definition of done.
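Here is an example of a measurable fit criterion. The Python sketch below checks the NFR “API response time ≤ 300 ms” against latency samples from a load test. It assumes the samples have already been collected, for instance exported from a load-testing tool. It computes the 95th percentile with the nearest-rank method, and the function names (`p95`, `meets_latency_nfr`) are hypothetical. The sketch checks only the latency threshold. It does not verify that the test actually reached 2,000 requests per second.

```python
import math


def p95(latencies_ms: list[float]) -> float:
    """Return the 95th-percentile latency using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    # Nearest rank: the smallest sample with at least 95% of samples at or below it
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_nfr(latencies_ms: list[float], threshold_ms: float = 300.0) -> bool:
    """Fit criterion: p95 latency must be at or below the threshold (300 ms by default)."""
    return p95(latencies_ms) <= threshold_ms


if __name__ == "__main__":
    # Hypothetical samples (ms) from one load-test run; the slowest 10% take 310 ms
    samples = [120, 180, 210, 250, 260, 270, 280, 290, 295, 310] * 10
    print(p95(samples), meets_latency_nfr(samples))  # 310 False
```

The slowest 10% of these samples sit at 310 ms, so the p95 lands on 310 ms and the requirement fails, even though most requests are well under the threshold. That sensitivity to the slow tail is the point of a percentile-based criterion.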

Modern frameworks from INCOSE’s Guide to Writing Requirements to ISTQB v4 emphasize verifiable wording and traceability. When you know exactly what you’re building and how you’ll prove it works, you’ve got a solid foundation.

Requirements analysis can feel like a meticulous process. Yet, having the right tools makes all the difference.

aqua cloud, a requirement and [test case management](https://aqua-cloud.io/test-case-management-guide/) platform, makes requirement analysis your strategic advantage. With its end-to-end traceability features, you can link requirements directly to test cases, test scenarios, and defects, eliminating the coverage gaps that lead to costly rework. aqua’s domain-trained AI Copilot automatically generates high-quality test cases from your requirements in seconds, with approximately 42% requiring no edits at all. The platform’s visual coverage and dependency mapping give your team immediate insight into relationships between requirements and test assets. aqua integrates with your existing workflow through native connections to Jira, Azure DevOps, GitHub, Jenkins, and other development tools via REST API. This keeps requirements synchronized across your entire tech stack without manual updates.

Save up to 12.8 hours per tester each week with aqua's AI-powered requirements management.

Why is Requirement Analysis Important in Software Development?

Solid requirements analysis keeps your project from collapsing mid-sprint. It is a critical process: skipping or rushing it invites miscommunication, scope creep, budget overruns, and endless rework tickets.

PMI’s 2025 Pulse of the Profession report links poor requirements directly to project performance issues. Updated CHAOS studies list unclear requirements among the top causes of project failure.

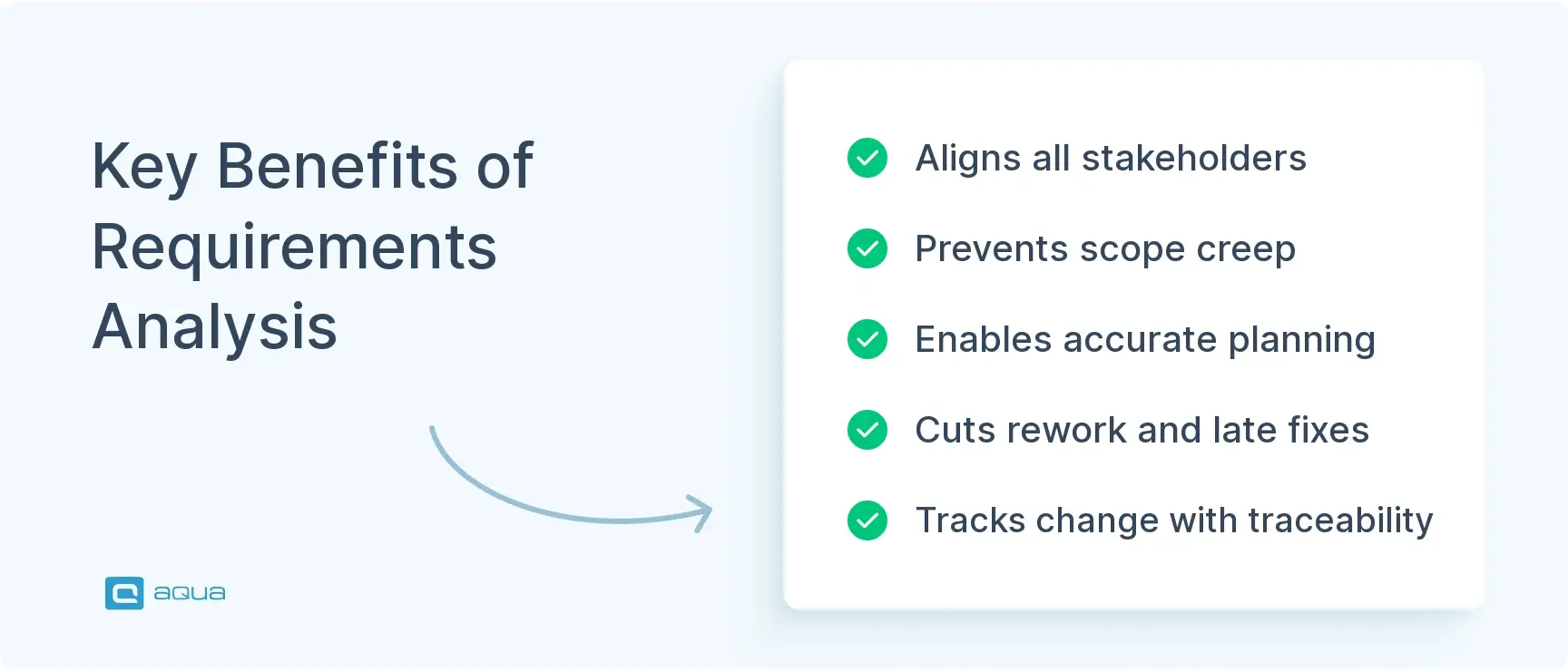

Here’s why requirements analysis pays off sprint after sprint:

- Ensures stakeholder alignment. You’ve been in that meeting where product says: “but I thought we agreed on X” and engineering insists it was Y. When everyone signs off on the same specs upfront, those arguments disappear.

- Prevents scope creep. You know what’s in this sprint, what’s deferred, and what’s out of scope. No more surprise feature requests sneaking in through Slack DMs.

- Facilitates better planning and estimation. You can actually size work accurately. You spot dependencies early. You catch risks before anyone writes code.

- Reduces rework and late defects. Fixing a vague requirement in a planning session takes minutes. Fixing it after deployment costs weeks and damages trust.

- Enables traceability and compliance. When requirements change, you know exactly which tests, code, and releases are affected. One query shows your full impact and coverage.

Neglecting requirements analysis costs you in time through constant clarifications, in budget through expensive fixes, and in trust when “done” keeps changing. If you treat it as continuous discovery, with weekly touchpoints and living documentation, you ship faster and with fewer blockers.

In my perspective, to have clarity on requirements, taking sign-off for the requirements is necessary; otherwise it will be difficult to decide the exit criteria for the sprints.

Key Steps of the Requirement Analysis Process

Let’s walk through a real-world requirement analysis example: building a mobile shopping app. Your stakeholders want “a smooth checkout experience,” but that’s too vague to test. Here’s how the requirement analysis process turns that fuzzy ask into a concrete spec you can build, validate, and deploy.

Step 1: Frame outcomes and scope. Start by anchoring on measurable outcomes. What does “smooth checkout” actually achieve? Define your context, constraints, and stakeholders. Establish a shared vocabulary early. For example: “Checkout” means from cart review through order confirmation. “Payment method” includes saved cards, Apple Pay, Google Pay, and guest checkout. This framing prevents interpretation drift before you write a single line of code.

Step 2: Elicit collaboratively. Don’t wait for a quarterly workshop. Run weekly touchpoints with customers, support, and internal users. Use interviews, analytics reviews, and quick experiments to surface real pain points. You might discover users abandon checkout when they can’t see the total cost upfront or when payment forms don’t autofill. Requirements analysis techniques like Event Storming help you map how checkout really happens.

Step 3: Model the domain and workflows. Turn those events into structured flows and boundaries. User Needs Mapping or simple swim-lane diagrams show who does what when. For checkout, you’d model states, actors, and decision points.

Step 4: Specify requirements with precision. Write atomic, testable requirements following INCOSE rules: one requirement per idea, use “shall,” assign a unique ID, include rationale and priority, and specify a verification method.

For the checkout flow, you might write requirements like these:

| Requirement ID | Specification | Priority | Rationale | Verification Method |
|---|---|---|---|---|
| REQ-CHK-001 | The system shall display the total order cost before the user enters payment details | High | Reduces cart abandonment | UI test + analytics validation |
| REQ-CHK-002 | Payment authorization shall complete within 3 seconds at 95th percentile for up to 500 concurrent users | High | User retention | Load test |
| REQ-CHK-003 | The system shall support PCI-DSS-compliant tokenization for credit card data | Critical | Compliance | Security audit + pen test |
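The INCOSE-style rules from Step 4 can be partly automated. The sketch below is a minimal, hypothetical linter and is not part of any tool named in this guide. It makes three assumptions:

- IDs follow the `REQ-XXX-000` pattern used in the table.
- Priorities use a four-level scale.
- The count of “shall” is a rough proxy for “one requirement per idea.”

```python
import re
from dataclasses import dataclass

# Hypothetical conventions for this sketch: ID format modeled on REQ-CHK-001
# and a four-level priority scale. Adjust both to your own standards.
ID_PATTERN = re.compile(r"REQ-[A-Z]+-\d{3}")
PRIORITIES = {"Critical", "High", "Medium", "Low"}


@dataclass(frozen=True)
class Requirement:
    req_id: str
    text: str
    priority: str
    rationale: str
    verification: str


def check_requirement(req: Requirement) -> list[str]:
    """Return rule violations for one requirement; an empty list means it passes."""
    problems = []
    if not ID_PATTERN.fullmatch(req.req_id):
        problems.append("missing or malformed ID")
    shall_count = len(re.findall(r"\bshall\b", req.text, re.IGNORECASE))
    if shall_count == 0:
        problems.append("no 'shall' statement")
    elif shall_count > 1:
        # Rough proxy for "one requirement per idea"
        problems.append("several 'shall' clauses; split into atomic requirements")
    if req.priority not in PRIORITIES:
        problems.append(f"unknown priority {req.priority!r}")
    if not req.rationale.strip():
        problems.append("missing rationale")
    if not req.verification.strip():
        problems.append("missing verification method")
    return problems


if __name__ == "__main__":
    chk_002 = Requirement(
        "REQ-CHK-002",
        "Payment authorization shall complete within 3 seconds at 95th percentile "
        "for up to 500 concurrent users",
        "High", "User retention", "Load test",
    )
    vague = Requirement("CHK-2", "Checkout should be smooth", "Urgent", "", "")
    print(check_requirement(chk_002))  # []
    print(check_requirement(vague))
```

A check like this cannot judge whether a requirement is correct. It only confirms that the requirement carries the attributes a reviewer needs, and peer review still does the rest.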

Step 5: Create acceptance criteria and examples. Turn each requirement into concrete scenarios that clarify edge cases like these:

| Scenario | Given | When | Then |
|---|---|---|---|
| Standard checkout | $50 cart, $5 promo, 8% tax, $10 shipping | User reaches checkout | Shows $58.60 total before payment (8% tax on the $45 discounted subtotal, shipping untaxed) |
| Stacked promotions | $100 cart, 10% + $15 discounts | User reaches checkout | Shows correct total with both discounts |
| Free shipping | $75 cart (meets threshold) | User reaches checkout | Shows $0.00 shipping |

These examples become your Gherkin-based automated tests and living documentation.
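Here is a rough sketch of how the scenario rows might become automated tests. They are written as plain pytest-style Python functions, with the Given/When/Then steps kept as comments. The pricing rules below are assumptions, because the table leaves them open:

- Percentage discounts apply before fixed ones.
- Tax applies to the discounted subtotal.
- Shipping is untaxed.
- The $75 free-shipping threshold is hypothetical.

Under those assumptions the standard scenario totals $58.60 ($45 + $3.60 tax + $10 shipping). `checkout_total` and `shipping_for` are illustrative names, not an existing API.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Optional

CENT = Decimal("0.01")


def shipping_for(subtotal: Decimal, base_shipping: Decimal,
                 free_threshold: Optional[Decimal] = None) -> Decimal:
    """Waive shipping once the pre-discount subtotal reaches the threshold (assumed rule)."""
    if free_threshold is not None and subtotal >= free_threshold:
        return Decimal("0.00")
    return base_shipping


def checkout_total(subtotal: Decimal, percent_off: Decimal = Decimal("0"),
                   fixed_off: Decimal = Decimal("0"), tax_rate: Decimal = Decimal("0"),
                   base_shipping: Decimal = Decimal("0"),
                   free_threshold: Optional[Decimal] = None) -> Decimal:
    # Assumption: percentage discounts apply before fixed-amount discounts
    discounted = max(subtotal * (1 - percent_off / 100) - fixed_off, Decimal("0"))
    # Assumption: tax applies to the discounted subtotal and shipping is untaxed
    tax = (discounted * tax_rate / 100).quantize(CENT, rounding=ROUND_HALF_UP)
    shipping = shipping_for(subtotal, base_shipping, free_threshold)
    return (discounted + tax + shipping).quantize(CENT, rounding=ROUND_HALF_UP)


# Each test mirrors one Given/When/Then row
def test_standard_checkout():
    # Given a $50 cart, a $5 promo, 8% tax, and $10 shipping
    # When the user reaches checkout
    total = checkout_total(Decimal("50"), fixed_off=Decimal("5"),
                           tax_rate=Decimal("8"), base_shipping=Decimal("10"))
    # Then the total before payment is $45 + $3.60 tax + $10 shipping
    assert total == Decimal("58.60")


def test_stacked_promotions():
    # Given a $100 cart with 10% and $15 discounts (no tax or shipping, for clarity)
    total = checkout_total(Decimal("100"), percent_off=Decimal("10"), fixed_off=Decimal("15"))
    # Then both discounts apply: $100 - $10 - $15
    assert total == Decimal("75.00")


def test_free_shipping():
    # Given a $75 cart and a hypothetical $75 free-shipping threshold
    # Then shipping is shown as $0.00
    assert shipping_for(Decimal("75"), Decimal("10"), Decimal("75")) == Decimal("0.00")


if __name__ == "__main__":
    for test in (test_standard_checkout, test_stacked_promotions, test_free_shipping):
        test()
    print("all checkout scenarios pass")
```

Writing the tests forces decisions the table leaves implicit, such as discount order and the taxable base. Those are good questions to take back to stakeholders.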

Step 6: Trace and baseline. Link each requirement to epics, code, tests, and releases. Use a traceability matrix or native tool links in Jira or Azure DevOps. When someone asks “Which tests cover REQ-CHK-002?” you answer in one click. Baseline at each increment to prevent silent scope creep.

Step 7: Validate and manage change. Review specs with stakeholders to confirm they match intent. When changes arrive, check impact via trace links, re-estimate, and update criteria. This keeps everyone aligned and prevents surprise gaps at demo time.

Techniques for Requirement Analysis

Modern requirements analysis draws on a toolkit of lightweight, collaborative techniques that keep discovery continuous and specs testable. Here’s what works in 2025.

Event storming. A facilitated workshop where you plaster a wall with sticky notes representing domain events: User Adds Item to Cart, Inventory Reserved, Payment Authorized, Order Shipped. You sequence these on a timeline, identify actors who trigger them, policies that connect events, and pain points where things break. It aligns cross-functional teams fast and builds shared domain language. Use it at the start of big features or when onboarding new team members.

Entity-relationship diagrams. These model your data structures and relationships. An ERD shows how entities connect:

| Entity | Relates To | Relationship |
|---|---|---|
| User | Order | One-to-many |
| Order | OrderItem | One-to-many |
| Product | Inventory | One-to-one |
| User | PaymentMethod | One-to-many |

Spelling out cardinalities like these prevents ambiguity about how data relates. Modern tools like aqua cloud let you generate draft ERDs from existing schemas, but collaborative modeling sessions catch gaps early.
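One way to carry the ERD’s cardinalities into code is to mirror them in type definitions: a single reference for one-to-one, a list for one-to-many. This sketch uses Python dataclasses. The field names (`sku`, `on_hand`, `token`, and so on) are hypothetical and not taken from any real schema.

```python
from dataclasses import dataclass, field


@dataclass
class Inventory:
    on_hand: int


@dataclass
class Product:
    sku: str
    inventory: Inventory  # Product -> Inventory: one-to-one (single reference)


@dataclass
class OrderItem:
    product: Product
    quantity: int


@dataclass
class Order:
    order_id: str
    items: list[OrderItem] = field(default_factory=list)  # Order -> OrderItem: one-to-many


@dataclass
class PaymentMethod:
    token: str  # hypothetical field: a tokenized card reference


@dataclass
class User:
    user_id: str
    orders: list[Order] = field(default_factory=list)  # User -> Order: one-to-many
    payment_methods: list[PaymentMethod] = field(default_factory=list)  # User -> PaymentMethod: one-to-many


if __name__ == "__main__":
    mug = Product("SKU-MUG", Inventory(on_hand=40))
    order = Order("ORD-1", [OrderItem(mug, 2)])
    user = User("U-1", orders=[order], payment_methods=[PaymentMethod("tok_123")])
    print(len(user.orders), user.orders[0].items[0].product.inventory.on_hand)  # 1 40
```

In a relational database, the same relationships would typically become foreign keys rather than nested objects. The dataclass form is simply a quick, reviewable rendering of the model.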

User stories with acceptance criteria. These bridge the gap between high-level needs and testable specs. A story follows the template: As a [role], I want [capability], so that [outcome]. Acceptance criteria make it verifiable: Given a user with saved cards, when they reach checkout, then they see their saved methods, select one, and complete the purchase without re-entering the CVV unless it is required for security.

Data flow diagrams. These show how data moves through your system: processes, data stores, external entities, and flows. DFDs help you spot bottlenecks and security boundaries. They are useful in regulatory contexts where you need to document data lineage.
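A DFD can also be kept as data, which makes trust-boundary checks repeatable. The sketch below assumes a hypothetical checkout DFD in which every node is tagged with a trust zone. Flows that cross zones usually need explicit security requirements, such as encryption, authentication, or tokenization. Node names, zones, and functions are illustrative.

```python
# Hypothetical checkout DFD. Each node is assigned to a trust zone.
ZONES = {
    "Shopper": "internet",             # external entity
    "Checkout Service": "internal",    # process
    "Orders DB": "internal",           # data store
    "Payment Gateway": "third-party",  # external entity
}

# Data flows as (source, destination, data carried)
FLOWS = [
    ("Shopper", "Checkout Service", "card details"),
    ("Checkout Service", "Payment Gateway", "card token"),
    ("Payment Gateway", "Checkout Service", "authorization result"),
    ("Checkout Service", "Orders DB", "order record"),
]


def boundary_crossings(flows, zones):
    """Flows whose endpoints sit in different trust zones: candidates for security requirements."""
    return [flow for flow in flows if zones[flow[0]] != zones[flow[1]]]


def lineage(flows, data_item):
    """Every hop a named data item takes, for data-lineage documentation."""
    return [(src, dst) for src, dst, data in flows if data == data_item]


if __name__ == "__main__":
    for src, dst, data in boundary_crossings(FLOWS, ZONES):
        print(f"{data}: {src} -> {dst} ({ZONES[src]} -> {ZONES[dst]})")
```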

Quality models and NFR templates. Formalize non-functional requirements with measurable criteria. Instead of “the app should be fast,” write “API p95 latency ≤ 300 ms under 2,000 RPS, measured via Apache JMeter.” Tie each NFR to a verification method so QA knows how to validate it.

Choose among these methods based on your project’s complexity and your team’s culture.

Challenges in Requirement Analysis

Ambiguous or incomplete requirements. Stakeholders use vague terms like secure, fast, or user-friendly without defining what these mean. Teams interpret them differently, leading to wasted work building the wrong solution.

Changing stakeholder needs. Requirements shift through Slack messages or hallway conversations, but specs never get updated. Traceability breaks, test coverage drifts, and release plans fall apart.

Misalignment among parties. Product wants speed, engineering wants solid architecture, QA needs testability, ops demands observability, and compliance requires audit trails. Without shared documentation, each group optimizes for themselves.

Tooling and documentation drift. Requirements live in Confluence, user stories in Jira, designs in Figma, tests in TestRail. No links between them. Keeping connections current requires discipline.

Best Tools for Requirements Analysis for 2026

The right requirements management tool doesn’t just store requirements. It enforces traceability, automates ambiguity checks, and integrates with your delivery pipeline. Here’s what’s working in 2025.

- aqua cloud: A modern, AI-powered test management and requirements platform purpose-built for QA teams. aqua cloud stands out by combining requirements management, test case design techniques, execution tracking, and defect management in a single, unified interface. With aqua, your requirements trace to tests, builds, and releases with ease. Its AI Copilot accelerates requirement refinement by suggesting acceptance criteria, flagging ambiguity, and generating test cases from specs. You get built-in traceability dashboards, real-time collaboration, and integrations with Jira, Azure DevOps, GitHub, and CI/CD pipelines. aqua cloud delivers end-to-end visibility without the overhead for your team.

- Jama Connect: Enterprise-grade requirements and test management with traceability, review workflows, and compliance support. Useful for teams operating in regulated industries where audit trails and change control are non-negotiable.

- DOORS Next: The legacy heavyweight for complex systems engineering. Offers baselining, versioning, and MBSE integrations. Steep learning curve, but if you’re building satellites or autonomous vehicles, the rigor may pay off.

- Jira + Confluence: The combo most agile teams use. Jira for backlog/stories, Confluence for detailed specs and glossaries. Not purpose-built for requirements, but plugins like Xray add traceability and test linking. Works if you invest in governance and link discipline.

A good thing about Jira and Confluence is that they can connect with other tools from the list for advanced capabilities. For example, aqua cloud has out-of-the-box integration with Jira.

- Azure DevOps: Microsoft’s integrated suite with Boards for backlog, Repos for code, Pipelines for CI/CD, and Test Plans for testing. Requirements live as work items with bidirectional trace links to commits, builds, and test cases. Best if you’re already in the Microsoft ecosystem.

- Lucidchart / Miro: Collaborative diagramming for Event Storming, ERDs, DFDs, and user journey maps. Not requirements managers, but critical for elicitation and modeling workshops. Export diagrams as artifacts linked from your main requirements repo.

- Gherkin-based BDD tools: Turn acceptance criteria into executable specs with tools like Cucumber, SpecFlow, and Behave. This is where your requirements live in practice: validated every build, updated every sprint. Integrate them with aqua cloud, Jira, or TestRail for full traceability.

Pick tools that integrate with your existing stack, enforce traceability by default, and don’t turn requirements into a separate silo. The best tool is the one your team actually uses and keeps current.

Best Practices for Requirement Analysis

Here’s what separates high-performing teams from those perpetually stuck in clarification loops.

1. Engage stakeholders early and continuously. You can’t just hold a kickoff meeting then disappear for three sprints. Your team needs continuous discovery: weekly interviews, analytics reviews, and quick experiments with your product trio. This keeps requirements grounded in real user needs and catches pivots before they derail work. When you run collaborative sessions like Event Storming or Example Mapping, you surface domain language, edge cases, and conflicts in real time.

2. Use clear, precise language and enforce a shared vocabulary. Your team should maintain a living glossary of terms, units, and SLAs. When everyone agrees checkout means cart-review-to-confirmation and fast means <300 ms p95, interpretation drift disappears. Following INCOSE writing rules helps:

- One requirement equals one idea

- Use shall for mandatory items

- Avoid TBDs

- Include measurable acceptance criteria

- Ban vague terms like user-friendly or robust unless you define them with metrics
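A first pass of these wording rules can be scripted. The sketch below is a crude, hypothetical filter. It flags TBDs, and it flags vague terms only when the requirement contains no number at all, on the assumption that a digit signals an attached metric. The term list is a starting point to extend from your glossary, and the check supplements peer review rather than replacing it.

```python
import re

# Assumed starter list of vague terms; extend it from your team's glossary.
VAGUE_TERMS = ["user-friendly", "robust", "fast", "secure", "intuitive", "easy", "flexible"]


def lint_wording(text: str) -> list[str]:
    """Flag TBDs and vague terms that are not backed by any number in the same requirement."""
    findings = []
    if re.search(r"\bTBD\b", text, re.IGNORECASE):
        findings.append("contains TBD")
    # Crude heuristic: a digit anywhere suggests a metric was supplied
    has_metric = re.search(r"\d", text) is not None
    for term in VAGUE_TERMS:
        if not has_metric and re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE):
            findings.append(f"vague term '{term}' without a metric")
    return findings


if __name__ == "__main__":
    print(lint_wording("The dashboard shall be user-friendly and fast"))
    # ["vague term 'user-friendly' without a metric", "vague term 'fast' without a metric"]
    print(lint_wording("API p95 latency shall be at most 300 ms under 2,000 RPS"))  # []
```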

When there are no requirements, I explore. Even if I have clear requirements, I still explore — it’s how I “test” them too.

3. Prioritize ruthlessly using frameworks like WSJF, Kano, or Cost of Delay. Not all requirements carry equal value or risk. You need to distinguish hygiene factors from delighters. When you sequence work to deliver high-value, high-risk items early, you validate assumptions while pivoting is still cheap.
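WSJF itself is simple arithmetic. In SAFe’s formulation, Cost of Delay is the sum of user-business value, time criticality, and risk reduction/opportunity enablement. WSJF divides that sum by job size. The Python sketch below applies the formula to a few checkout items, using relative scores invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BacklogItem:
    name: str
    business_value: int    # relative scores, e.g. on a 1, 2, 3, 5, 8, 13, 20 scale
    time_criticality: int
    risk_reduction: int    # risk reduction / opportunity enablement
    job_size: int

    @property
    def cost_of_delay(self) -> int:
        return self.business_value + self.time_criticality + self.risk_reduction

    @property
    def wsjf(self) -> float:
        return self.cost_of_delay / self.job_size


def prioritize(items: list[BacklogItem]) -> list[BacklogItem]:
    """Highest WSJF first: the most delay cost per unit of effort."""
    return sorted(items, key=lambda item: item.wsjf, reverse=True)


if __name__ == "__main__":
    backlog = [
        BacklogItem("Saved-card checkout", 8, 3, 2, 5),         # 13 / 5 = 2.6
        BacklogItem("REQ-CHK-003 tokenization", 8, 13, 13, 8),  # 34 / 8 = 4.25
        BacklogItem("REQ-CHK-001 total upfront", 13, 8, 5, 3),  # 26 / 3 ≈ 8.67
    ]
    for item in prioritize(backlog):
        print(f"{item.wsjf:5.2f}  {item.name}")
```

Small, high-value items float to the top. This matches the advice to deliver high-value, high-risk work early while pivoting is still cheap.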

4. Document everything with traceability from day one. Every requirement your team writes needs a unique ID, rationale, priority, acceptance criteria, and verification method. You should link requirements to parent epics, design decisions, code modules, test cases, and defects. When you use a Requirements Traceability Matrix or native tool links, impact analysis becomes one query away. Baselining requirements at each release prevents silent scope creep.
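Baselines pay off when you compare them. This sketch assumes each baseline is a snapshot mapping requirement IDs to their text. Diffing two snapshots shows exactly what was added, removed, or silently reworded between releases. The release data and `diff_baselines` are hypothetical.

```python
def diff_baselines(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Compare two baselines that map requirement ID -> requirement text."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(rid for rid in old.keys() & new.keys() if old[rid] != new[rid]),
    }


if __name__ == "__main__":
    # Hypothetical baselines for two releases
    release_2_3 = {
        "REQ-CHK-001": "The system shall display the total order cost before payment",
        "REQ-CHK-002": "Payment authorization shall complete within 3 seconds at p95",
    }
    release_2_4 = {
        "REQ-CHK-001": "The system shall display the total order cost before payment",
        "REQ-CHK-002": "Payment authorization shall complete within 2 seconds at p95",
        "REQ-CHK-003": "The system shall support PCI-DSS-compliant tokenization",
    }
    print(diff_baselines(release_2_3, release_2_4))
    # {'added': ['REQ-CHK-003'], 'removed': [], 'changed': ['REQ-CHK-002']}
```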

5. Validate and review iteratively. Your requirements will evolve. Run peer reviews to catch ambiguity, feasibility issues, and conflicts. You can use static analysis tools or LLM-assisted checks to flag vague language. When changes arrive, assess impact via trace links, re-estimate, and communicate deltas. Close the loop with acceptance tests. If your team can’t prove a requirement in a test, it’s not done.

You may also be interested in aqua’s guide to requirement volatility in software development.

6. Adopt executable specifications. When you turn acceptance criteria into Gherkin scenarios, they double as automated tests and living documentation. Executable specs make drift visible instantly: failing tests tell you that requirements changed or weren’t met.
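The toy runner below shows what “executable” means mechanically. It uses only the standard library and is not the API of Cucumber, SpecFlow, or Behave, which do this binding (and much more) for you. The idea is the same: each Gherkin line is matched against a registered pattern and dispatched to a function. The `step` decorator, `run_scenario`, and the cart steps are hypothetical names for this sketch.

```python
import re

# Toy step registry: (compiled pattern, step function). Real BDD tools provide this for you.
STEPS = []


def step(pattern):
    """Decorator that binds a step phrase (a regex) to a Python function."""
    def register(fn):
        STEPS.append((re.compile(pattern), fn))
        return fn
    return register


def run_scenario(text):
    """Execute each Given/When/Then/And line by dispatching to its matching step."""
    context = {}
    for raw_line in text.strip().splitlines():
        keyword, _, phrase = raw_line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And"}:
            continue  # skip the Scenario: title and blank lines
        for pattern, fn in STEPS:
            match = pattern.fullmatch(phrase)
            if match:
                fn(context, *match.groups())
                break
        else:
            raise LookupError(f"undefined step: {raw_line.strip()}")
    return context


@step(r"a cart worth \$(\d+)")
def cart_worth(ctx, amount):
    ctx["subtotal"] = int(amount)


@step(r"a \$(\d+) promo is applied")
def promo_applied(ctx, amount):
    ctx["subtotal"] -= int(amount)


@step(r"the subtotal is \$(\d+)")
def subtotal_is(ctx, amount):
    assert ctx["subtotal"] == int(amount), f"expected {amount}, got {ctx['subtotal']}"


SCENARIO = """
Scenario: Promo reduces the subtotal
  Given a cart worth $50
  When a $5 promo is applied
  Then the subtotal is $45
"""

if __name__ == "__main__":
    print(run_scenario(SCENARIO))  # {'subtotal': 45}
```

If a scenario is reworded so that no step matches, the runner fails loudly with `LookupError`. If an expectation changes, the `Then` step fails. Either way, the drift becomes visible on the next run.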

Effective requirements analysis drives successful software development by bridging vague requests and precise, testable specifications. aqua cloud, an AI-driven test and requirement management platform, eliminates process friction with its integrated approach to management and testing. aqua’s comprehensive traceability ensures that every requirement is linked to corresponding test cases, giving you instant visibility into coverage gaps and impact analysis when requirements change. aqua’s domain-trained AI Copilot transforms requirements into structured test cases in seconds, using your project’s documentation to create deeply relevant scenarios. The platform provides a unified environment, so you no longer need separate tools for requirements, test management, and defect tracking. aqua connects with the solutions you already rely on through integrations with Jira, Confluence, Azure DevOps, GitHub, Selenium, and others via REST API. With aqua, keeping requirements synchronized across platforms is easy.

Achieve 100% requirements coverage and reduce rework by up to 97% with aqua’s unified testing platform.

Try aqua for free

Conclusion

Requirements analysis helps you avoid rework, confusion, and those last-minute changes that slow everything down. When you treat it as an ongoing, collaborative process with clear goals, everything stays aligned. By using simple methods like Event Storming or Example Mapping, you deliver faster and face fewer surprises. Involve stakeholders early, write testable specs, and validate often. With tools like aqua cloud linking requirements and testing, analysis becomes your advantage.