Performance issues are among the most significant factors affecting user experience. Poor UX means lost revenue and damage to the company brand, so keep reading to learn about the most common performance testing pitfalls.

What is performance testing, and why is it important?

Performance testing is the evaluation of an application’s performance under specific conditions, like user load, data volume, and network latency. With performance testing, you can identify bottlenecks, bugs, or other issues affecting the application’s speed, scalability, and reliability. Replicating real end-user conditions in a test environment is how you spot and address performance weaknesses before customers suffer from them.

"If the end user perceives bad performance from your website, their next click will likely be on your-competition.com."

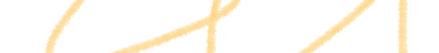

Critical mistakes in performance testing

Now, let’s dive into ten major performance testing mistakes that will haunt you and your bottom line if you don’t avoid them.

Performance testing is only one aspect of the development cycle. Even if you specialise in non-functional testing, you should still understand the other core areas of testing methodology.

1. Not defining clear testing objectives

One of the most common mistakes in performance testing is not defining clear testing objectives. Without clear objectives, you will struggle to determine the following:

- What to test

- How to test it

- What metrics to use to measure performance

This will result in inaccurate or incomplete results that don’t provide the necessary insights to optimise the application’s performance. Additionally, unclear objectives can lead to wasted resources, as the testing team may spend time and effort testing irrelevant or non-critical aspects of the application.

Solution: To avoid this mistake, establishing a performance testing strategy before beginning the testing is crucial. Here are some tips:

- Define the testing purpose, including specific goals and metrics to measure performance.

- Identify critical scenarios that represent real user interactions with the application.

- Determine the types of performance tests to conduct, like stress or load testing.

- Ensure the testing environment accurately reflects the real-world scenarios the application will face.

- Use the best tools for performance testing that align with the defined objectives.
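One way to make objectives concrete is to write them down as measurable thresholds that a script can check. The sketch below is only an illustration: the scenario names, metric names, and threshold values are hypothetical and should be replaced with goals agreed for your own application.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    """One measurable performance goal (all values here are hypothetical)."""
    scenario: str      # user flow under test, e.g. "checkout"
    metric: str        # e.g. "p95_latency_ms" or "error_rate"
    threshold: float   # maximum acceptable value


def evaluate(objectives, measured):
    """Return the objectives whose measured value is missing or above threshold."""
    failures = []
    for obj in objectives:
        value = measured.get((obj.scenario, obj.metric))
        if value is None or value > obj.threshold:
            failures.append(obj)
    return failures


objectives = [
    Objective("checkout", "p95_latency_ms", 800.0),
    Objective("checkout", "error_rate", 0.01),
    Objective("search", "p95_latency_ms", 300.0),
]

# Illustrative measurements, as a test run might report them.
measured = {
    ("checkout", "p95_latency_ms"): 640.0,
    ("checkout", "error_rate"): 0.02,
    ("search", "p95_latency_ms"): 280.0,
}

failed = evaluate(objectives, measured)
for obj in failed:
    print(f"NOT MET: {obj.scenario} {obj.metric} (limit {obj.threshold})")
```

Keeping objectives in a structure like this makes it obvious what is being tested, how it is measured, and when a result counts as a failure.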

2. Not using realistic test data

Another common performance testing mistake is not using realistic test data. When testing with unrealistic or synthetic data, you can’t accurately simulate real-world conditions and predict the application’s performance in production. This will result in missed performance issues and bottlenecks when the application is deployed in a live environment.

Solution: Here are some tips on how to avoid this mistake:

- Collect real-world data that represents users’ characteristics, behaviours, and interactions

- Use a mix of data types and sizes that closely match production data

- Mirror what actually happens in production, not what you assume happens. Include the power users who generate 50x more activity than your average customer, plus the massive datasets that make your system sweat. Pull real production metrics first: check your database for the heaviest users and largest data volumes from the past quarter. Be wary of testing with “representative samples”, which rarely capture the chaos that breaks systems. Edge cases that happen daily are not edge cases.

- Create test data covering various scenarios and edge cases, including peak loads and extreme values

- Avoid using synthetic data generated by tools or scripts, if possible

- Where real data cannot be used, GPT-based solutions can quickly generate a data set that has the same key properties as your real user data. Depending on the jurisdiction and industry regulations, you could also create a dataset inspired by the real data of your users

- Regularly update the test data to meet the application’s changing requirements
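When production data cannot be copied directly, the power-user skew described above can at least be reproduced in generated data. The following is a minimal sketch under stated assumptions: the 50x multiplier comes from the tip above, while the 2% share of power users, the base event count, and the function name are hypothetical and should be derived from your own production metrics.

```python
import random


def build_user_activity(n_users, base_events, power_share=0.02,
                        power_multiplier=50, seed=42):
    """Return {user_id: events_per_day} with a small share of heavy users."""
    rng = random.Random(seed)  # fixed seed keeps test runs repeatable
    n_power = max(1, round(n_users * power_share))
    power_ids = set(rng.sample(range(n_users), n_power))
    return {
        user_id: base_events * (power_multiplier if user_id in power_ids else 1)
        for user_id in range(n_users)
    }


activity = build_user_activity(n_users=1000, base_events=10)
heavy_events = sum(v for v in activity.values() if v > 10)
share = heavy_events / sum(activity.values())
print(f"Power users generate {share:.0%} of all events")
```

With these assumed parameters, a small group of users accounts for roughly half of all activity, which is the kind of imbalance a uniform data set would hide.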

To avoid the common mistakes of missing performance issues, aqua’s AI Copilot offers a solution that can save time and improve the accuracy of your testing. With features such as creating entire test cases from requirements, removing duplicate tests as well as identifying and prioritising essential tests, aqua’s AI Copilot can help ensure your performance testing is efficient and effective.


3. Neglecting the test environment

The third pitfall on our list is not keeping the test environment up-to-date. A proper test environment is critical in performance testing because it significantly affects the test results. Invalid results lead to unreliable, if not outright wrong, conclusions about the application’s performance.

Solution: Here are some tips to avoid this mistake:

- Replicate the production environment as closely as possible.

- Use identical infrastructure-as-code templates for both test and production environments. This keeps your hardware specs, configs, and network setups in sync, so there are no nasty surprises when you go live. Environment drift creeps in when someone manually tweaks “just one small setting” in production. Stick to code-only changes and you’ll dodge those 2 AM emergency calls.

- Test the application in multiple test environments to account for variations in production environments.

- Use a dedicated test environment separate from development, staging, or production environments.

- Monitor the test environment closely during testing to keep it stable and consistent.

Testing in production-like and evolving environments

Your performance testing environment needs to stay current with your rapidly shifting software stack and virtualised infrastructure. Production systems change fast: new processes pop up, resource contention shifts, and dynamic workloads can completely throw off your test assumptions.

Start by setting up automated alerts when your production environment changes by more than 20% in key metrics like CPU allocation or memory usage. Teams that skip this step often end up testing against outdated specs.
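The 20% rule can be sketched as a simple comparison between the specs your test environment was built from and the current production values. This is a minimal illustration, not a monitoring integration: the metric names and numbers are hypothetical, and in practice the production values would come from your infrastructure monitoring tool.

```python
def find_drift(baseline, production, tolerance=0.20):
    """Return {metric: relative_change} for metrics that moved beyond tolerance."""
    drifted = {}
    for metric, base_value in baseline.items():
        prod_value = production.get(metric)
        if prod_value is None or base_value == 0:
            # Missing or zero-baseline metrics cannot be compared, so flag them.
            drifted[metric] = None
            continue
        change = abs(prod_value - base_value) / base_value
        if change > tolerance:
            drifted[metric] = change
    return drifted


# Hypothetical specs: what the test environment mirrors vs. production today.
baseline = {"cpu_cores": 8, "memory_gb": 32, "db_connections": 100}
production = {"cpu_cores": 8, "memory_gb": 48, "db_connections": 110}

drift = find_drift(baseline, production)
for metric, change in drift.items():
    print(f"ALERT: {metric} drifted by {change:.0%}")
```

Here only the memory allocation would raise an alert, because it moved by 50% while the connection limit changed by 10%.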

Use environment monitoring and log analysis to match your test conditions with actual live usage patterns. Schedule monthly check-ins with all stakeholders to catch those sneaky late-breaking changes that somehow slip through. Infrastructure monitoring tools can spot configuration drift before it becomes your problem, and catching unknown bottlenecks in testing beats explaining outages to users.

One thing that might surprise you: the biggest environment mismatches often come from seemingly minor updates that cascade into major performance differences. Keep your test environment specs documented and version-controlled just like your code.

4. Not testing for peak loads

The next most common mistake in performance testing is not testing for peak loads. If you only test for average loads and do not account for peak usage scenarios, you risk performance issues during high-traffic periods.

Solution: Here are some tips for covering peak loads:

- Identify the peak usage scenarios for your application and design performance tests to simulate them.

- Conduct stress testing to determine the maximum capacity of the application. Ensure that it can handle peak loads without performance difficulties.

- Analyse the performance test results to identify performance bottlenecks and optimise the application accordingly.

- Use cloud-based testing platforms that simulate high traffic volumes from different locations.

- Test beyond expected peaks to ensure your application can handle sudden spikes in traffic, especially for B2C solutions that may go viral or experience unexpected surges in user activity. Failing to handle such traffic can lead to lost opportunities and negative reviews that would hurt the application’s reputation.
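To illustrate testing at average load, expected peak, and beyond, here is a minimal sketch of a staged load run. It is not a replacement for a load testing tool: `handle_request` is a hypothetical stand-in that only sleeps, and the user counts per stage are assumptions. In a real test, the stub would be replaced by a call to the system under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(_):
    """Stand-in for a real request; replace with a call to the system under test."""
    started = time.perf_counter()
    time.sleep(0.001)  # simulated service time
    return time.perf_counter() - started


def run_stage(concurrent_users, requests_per_user):
    """Fire requests from a pool of worker threads and collect latencies in seconds."""
    total = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        return list(pool.map(handle_request, range(total)))


def p95(latencies):
    """Nearest-rank 95th percentile, using integer arithmetic for the rank."""
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n)
    return ordered[rank - 1]


# Hypothetical stages: average load, expected peak, and double the expected peak.
for label, users in [("average", 5), ("expected peak", 20), ("beyond peak", 40)]:
    latencies = run_stage(users, requests_per_user=5)
    print(f"{label}: {len(latencies)} requests, p95 = {p95(latencies) * 1000:.1f} ms")
```

The last stage is the important one: it deliberately exceeds the expected peak so that a sudden spike is something you have already observed rather than something you discover in production.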

5. Not testing early enough

The next performance testing mistake is not testing early enough in the development lifecycle. If you wait until the later stages of development to conduct performance testing, any issues you discover will cost more time and money to fix and may force you to rework the solution.

Solution: To avoid this, you should do the following:

- Conduct performance testing early and often.

- Use an automated performance test tool to streamline testing and provide timely feedback.

- Integrate performance testing into your CI/CD pipeline to catch performance issues before production.

6. Not analysing the root cause

The sixth common mistake in performance testing is not analysing the root cause of performance issues. Testers may identify performance issues during testing, but if devs don’t analyse the root cause, the same issue can resurface in production, where it is even harder to pinpoint and can have drastic consequences.

Solution: There are some steps you can take to avoid this mistake:

- Identify the specific performance metrics important for your application and monitor them closely.

- Use monitoring tools to track performance metrics in real time during testing.

- Trace each issue to its root cause, then develop a comprehensive solution that addresses the underlying issue rather than just treating the symptoms.

- Finally, validate the solution’s effectiveness by retesting and ensuring you have resolved the performance issue.
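A simple starting point for root cause analysis is to break slow requests down by component and see where the time actually goes. The sketch below assumes you already collect per-component timings for each request; the component names and millisecond values are made up for illustration.

```python
def rank_components(traces):
    """Rank components by their share of total time across the given traces."""
    totals = {}
    for trace in traces:
        for component, ms in trace.items():
            totals[component] = totals.get(component, 0) + ms
    grand_total = sum(totals.values())
    if grand_total == 0:
        return []
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [(name, ms / grand_total) for name, ms in ranked]


# Hypothetical timings (ms) for three slow requests captured during a test run.
slow_traces = [
    {"app": 40, "database": 310, "cache": 10},
    {"app": 50, "database": 290, "cache": 10},
    {"app": 45, "database": 330, "cache": 15},
]

ranking = rank_components(slow_traces)
for name, share in ranking:
    print(f"{name}: {share:.0%} of time in slow requests")
```

In this made-up data the database dominates, so tuning application code first would treat a symptom rather than the cause.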

7. Overlooking scalability testing

When you overlook scalability testing, the potential for system failure under increased load remains unaddressed. You need to observe how the system handles growth in practice to be sure it can scale effectively. In scalability testing, you assess the system’s performance as the workload grows, identifying its breaking points and determining whether it can accommodate increased demands.

Solution:

The solution is simple. You should conduct thorough scalability tests that gradually increase the load, replicating scenarios that mimic future usage patterns. By doing so, you can unearth potential bottlenecks early, allowing for optimisation and enhancement of the system’s ability to handle expanding user bases or data volumes.
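Once you have results from gradually increasing load steps, locating the breaking point is a matter of finding the first step that violates your limits. The sketch below assumes each step reports a user count, a p95 latency, and an error rate; the sample numbers and limits are illustrative, not measurements.

```python
def find_breaking_point(results, max_p95_ms, max_error_rate):
    """Return (last passing load, first failing load) from ordered step results.

    `results` is a list of (users, p95_ms, error_rate) sorted by increasing users.
    The second element is None when no step exceeded the limits.
    """
    last_ok = None
    for users, p95_ms, error_rate in results:
        if p95_ms > max_p95_ms or error_rate > max_error_rate:
            return last_ok, users
        last_ok = users
    return last_ok, None


# Hypothetical step results: load doubles at every step.
steps = [
    (100, 220, 0.000),
    (200, 260, 0.001),
    (400, 410, 0.004),
    (800, 1350, 0.060),
]

last_ok, first_failed = find_breaking_point(steps, max_p95_ms=500, max_error_rate=0.01)
print(f"Stable up to {last_ok} users, degraded at {first_failed} users")
```

The gap between the two values tells you where to add finer-grained steps in the next run.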

8. Ignoring network latency

When you disregard network latency during performance testing, you overlook a critical factor that profoundly impacts your application’s reliability in real-world scenarios. The failure to simulate diverse network conditions can lead to inaccurate performance assessments.

Solution:

The solution is to use network emulation tools that mirror varied network speeds, latencies, and conditions. By replicating different network scenarios, you gain insights into your application’s behaviour under each of them. This evaluation helps you optimise your system to perform consistently across various network environments, ensuring a smoother user experience.
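Before running emulated tests, a back-of-the-envelope model can show how much network conditions matter for a given page or API call. The sketch below is a deliberate simplification that ignores effects such as connection setup, packet loss, and congestion control. The profile names and their values are assumptions for illustration, not measurements of real networks.

```python
def estimate_response_ms(server_ms, round_trips, payload_kb, rtt_ms, bandwidth_kbps):
    """Rough response time: server time + round-trip delays + transfer time."""
    # payload_kb is in kilobytes, bandwidth in kilobits per second.
    transfer_ms = payload_kb * 8 * 1000 / bandwidth_kbps
    return server_ms + round_trips * rtt_ms + transfer_ms


# Hypothetical network profiles to compare.
PROFILES = {
    "fast_wired": {"rtt_ms": 5, "bandwidth_kbps": 100_000},
    "mobile_good": {"rtt_ms": 50, "bandwidth_kbps": 10_000},
    "mobile_poor": {"rtt_ms": 300, "bandwidth_kbps": 1_000},
}

estimates = {
    name: estimate_response_ms(server_ms=200, round_trips=4, payload_kb=500, **profile)
    for name, profile in PROFILES.items()
}
for name, total_ms in estimates.items():
    print(f"{name}: about {total_ms:.0f} ms")
```

Even with identical server time, the estimated totals differ by more than an order of magnitude, which is why a test run only on a fast internal network says little about what remote users experience.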

9. Disregarding third-party integrations

Neglecting to test third-party integrations thoroughly creates blind spots in understanding how your system performs when interacting with external services or APIs. This oversight can lead to unforeseen bottlenecks or vulnerabilities.

For instance, suppose an e-commerce platform integrates a third-party payment gateway without thorough testing. If the integration is flawed and doesn’t handle certain payment scenarios correctly, it might result in transaction failures, leaving customers unable to complete purchases. Such failures disrupt the user experience and reduce revenue for the business.

Solution:

To avoid this mistake, test every integration point comprehensively, both independently and alongside your primary system. This thorough assessment allows you to identify potential issues and fortify your system’s performance, ensuring its robustness even when dealing with external services.
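Testing an integration independently often means replacing the external service with a stub whose behaviour you control, such as a gateway that responds slowly. The sketch below shows the idea with a hypothetical `fake_gateway` and a caller that enforces a timeout; none of these names belong to a real payment API.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def fake_gateway(delay_s):
    """Stub for a third-party payment gateway with a configurable delay."""
    time.sleep(delay_s)
    return "approved"


def charge(gateway, delay_s, timeout_s):
    """Call the gateway and give up after timeout_s instead of blocking the user."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(gateway, delay_s)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return "timed_out"
    finally:
        # Do not wait for the slow call; the worker thread finishes on its own.
        pool.shutdown(wait=False)


print(charge(fake_gateway, delay_s=0.0, timeout_s=0.5))   # healthy gateway
print(charge(fake_gateway, delay_s=0.3, timeout_s=0.05))  # degraded gateway
```

Running the same scenarios against the stub and then against the real sandbox of the provider helps separate problems in your own code from problems in the external service.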

10. Not doing continuous tests

Neglecting to include performance testing throughout your development process is like constructing a building without periodically checking its foundation. When performance tests aren’t integrated into your continuous development pipeline, you’re essentially delaying the discovery of potential performance issues until later stages. This delay can lead to higher costs and efforts for fixing problems that could have been caught earlier.

Solution:

To avoid this performance engineering mistake, you should embed automated performance tests within your testing pipeline and consistently monitor the system’s behaviour. Detecting issues early allows for swift resolution, preventing minor glitches from snowballing into major setbacks during production. This proactive approach ensures a stable and reliable system and saves valuable time, resources, and expenses that would otherwise be spent rectifying issues discovered too late in the development cycle.

Performance quality gates in CI/CD

Performance testing isn’t something you bolt on later; it needs to live right inside your CI/CD pipeline. Smart teams now run performance checks with every commit, setting up automated gates that’ll block any build if response times spike or error rates jump above baseline thresholds.

Add one simple performance test that measures your app’s core user flow. Let it run for two weeks to establish your baseline, then set your gate at 20% above that average. This way, your developers start thinking about performance before they even write code. When builds get rejected automatically for being slow, performance becomes everyone’s job, not just something QA worries about later.
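The baseline-plus-20% gate can be sketched in a few lines. The function names and sample values below are hypothetical; in a real pipeline the baseline samples would come from the two weeks of recorded runs, and a failing gate would make the CI step exit with a non-zero status.

```python
from statistics import mean


def gate_threshold(baseline_samples_ms, tolerance=0.20):
    """Gate limit: the baseline average plus the allowed tolerance."""
    return mean(baseline_samples_ms) * (1 + tolerance)


def passes_gate(current_ms, baseline_samples_ms, tolerance=0.20):
    """True when the current measurement stays within the gate limit."""
    return current_ms <= gate_threshold(baseline_samples_ms, tolerance)


# Hypothetical response times (ms) for the core user flow during the baseline period.
baseline_ms = [400, 420, 380, 400]

for build, measured_ms in [("build-101", 470), ("build-102", 500)]:
    verdict = "pass" if passes_gate(measured_ms, baseline_ms) else "BLOCKED"
    print(f"{build}: {measured_ms} ms -> {verdict}")
```

A single average is the simplest possible baseline. If your response times are noisy, gating on a percentile such as p95 instead of the mean is a common refinement.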

Conclusion

Effective performance testing requires careful planning, realistic test scenarios and data, and proper analysis of performance issues. If you skip these steps, you will face performance bottlenecks, reduced application scalability, and poor user experience. By avoiding the common performance testing mistakes described above, you can ensure that your applications are well-equipped to handle real-world load, deliver seamless user experiences, and avoid the costly impact of performance-related issues.

If you are looking for actionable tips to improve your testing processes and choose the right tools, aqua has got you covered. aqua’s eBook is the ultimate resource for anyone who wants to improve their testing practices. This eBook includes a test strategy template that requires minimal changes, requirements for every level of testing, and practical tips to enhance your testing workflows. With the recommendations for choosing the right tools, you can take your testing to the next level.
