Key Takeaways

- Requirements elicitation uncovers what stakeholders actually need rather than just what they say they want.

- Software requirements fall into three categories: functional, non-functional, and system requirements.

- Effective elicitation combines multiple methods. Interviews provide rich context while surveys scale prioritization.

- Clear project vision statements serve as decision-making anchors for feature alignment.

- QA participation in elicitation surfaces testability gaps and validates acceptance criteria early.

Even the most well-defined requirements can falter when teams lack a shared vocabulary or when project boundaries remain unclear. Read on to learn how to avoid these pitfalls and master eight proven requirement elicitation strategies.

Understanding Software Requirements

Software requirements are the documented needs and constraints that a system must satisfy. Think of them as the middle ground between what stakeholders envision and what your dev team will actually build. They define scope, drive design decisions, and become the yardstick your QA team uses to verify that what shipped is what was promised.

Requirements fall into three buckets:

- Functional requirements. They describe what the system does: “Users can filter search results by date range” or “the API returns a 404 for missing resources.”

- Non-functional requirements. These cover the how: performance benchmarks, security constraints, usability standards, and scalability targets. These often get short shrift during the elicitation of requirements, but they’re critical for defining quality attributes.

- System requirements. These describe the environment and infrastructure needs: OS compatibility, browser support, third-party integrations, and hardware specs. Miss these and you’re troubleshooting “works on my machine” bugs in production.

For example, a functional requirement might state: “When a user submits an invalid email format, the system displays an inline error message within 100ms.” The related non-functional requirement would specify response time and accessibility, while the system requirement confirms it works across Chrome 120+, Firefox 115+, and Safari 17+.
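A requirement phrased this way can be turned almost directly into an automated check. The sketch below is illustrative only: the function name `validate_email`, the regular expression, and the error text are assumptions made for this example, and the pattern is deliberately simplistic rather than a complete email validator.

```python
import re
from typing import Optional

# Hypothetical, deliberately simple pattern: exactly one "@", no whitespace,
# and at least one dot in the domain part.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_email(value: str) -> Optional[str]:
    """Return an inline error message for an invalid email, or None if it looks valid."""
    if EMAIL_PATTERN.fullmatch(value):
        return None
    return "Enter a valid email address, for example name@example.com."


# Acceptance-style checks derived from the functional requirement.
assert validate_email("tester@example.com") is None
assert validate_email("tester@example") is not None
assert validate_email("not an email") is not None
```

This covers only the functional behavior. The 100ms budget and the browser matrix would need separate performance and cross-browser checks.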

The Requirements Elicitation Process Explained

The requirements elicitation process follows a structured approach to discovering, analyzing, and documenting what your system needs to do. Understanding requirements management vs engineering helps clarify where elicitation fits in the broader development lifecycle.

The process starts with stakeholder identification. Map who has skin in the game, from end users and product owners to compliance teams and ops folks. Don’t simply list names. Understand their concerns, their language, and their constraints so you can ask the right questions later. QA teams participate as stakeholders to ensure testability concerns are represented from the start.

Once you know who to talk to, requirements analysis kicks in. Apply various requirement elicitation techniques to extract both explicit needs and the tacit stuff people assume you know. Good analysis means probing beneath surface requests to uncover the actual problem. If someone asks for faster search, are they really asking for better relevance or fewer clicks, or are they just frustrated because the current UI is clunky? The goal is to translate raw input into clear, testable statements. Each statement should pass the “necessary, unambiguous, verifiable” bar set by ISO/IEC/IEEE 29148. QA teams review requirements during this phase to validate testability.

Documentation is where those analyzed needs become formal artifacts: user stories, use cases, specifications, acceptance criteria. Keep it lightweight enough that people will actually read it, but rigorous enough that your QA team can trace a failed test back to a specific requirement. Requirements management handles the ongoing work: tracking changes, maintaining traceability from requirement to code to test, and handling the inevitable scope creep.
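To make the traceability idea concrete, here is a minimal sketch of a requirement record linked to its source and its test cases. The class, field names, and IDs (`REQ-1`, `TC-10`) are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Requirement:
    req_id: str
    statement: str
    source: str  # where the need came from, e.g. an interview or a spec review
    test_ids: List[str] = field(default_factory=list)


def coverage_gaps(requirements: List[Requirement]) -> List[str]:
    """Return IDs of requirements that have no linked test case."""
    return [r.req_id for r in requirements if not r.test_ids]


def requirements_for_test(requirements: List[Requirement], test_id: str) -> List[str]:
    """Trace a (failed) test back to the requirement IDs it verifies."""
    return [r.req_id for r in requirements if test_id in r.test_ids]


# Invented sample data for illustration.
requirements = [
    Requirement("REQ-1", "Users can filter search results by date range",
                source="user interview", test_ids=["TC-10", "TC-11"]),
    Requirement("REQ-2", "The API returns a 404 for missing resources",
                source="API spec review"),
]

print(coverage_gaps(requirements))
print(requirements_for_test(requirements, "TC-10"))
```

Even a structure this small answers the two questions the paragraph raises: which requirement a failed test belongs to, and which requirements nobody is testing yet.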

Best practices for keeping this process humming:

- Confirm early and often: Send stakeholders a recap after each session and ask, “What did we miss or misstate?” It catches misunderstandings before they calcify into code.

- Maintain traceability: Link each requirement to its source and downstream artifacts. When priorities shift, you’ll know exactly what’s impacted.

- Version everything: Requirements drift. Track who requested what change, when, and why.

- Apply quality gates: Run every drafted requirement through a 29148 lint check before it goes into the backlog. Is it clear, verifiable, and feasible?
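A quality gate like this can start as a very small script. The sketch below is a crude heuristic, not an implementation of ISO/IEC/IEEE 29148: the vague-term list and the obligation keywords are assumptions chosen for illustration, and a real gate would still need human review.

```python
import re
from typing import List

# Illustrative vague terms; an assumption for this sketch, not quoted from the standard.
VAGUE_TERMS = ("fast", "easy", "user-friendly", "better", "robust", "etc")

# Words that usually signal an obligation or an observable behavior (also an assumption).
OBLIGATION_PATTERN = re.compile(r"\b(shall|must|can|displays|returns)\b")


def lint_requirement(statement: str) -> List[str]:
    """Return a list of warnings for one drafted requirement statement."""
    warnings = []
    lowered = statement.lower()
    for term in VAGUE_TERMS:
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            warnings.append(f"vague term: '{term}'")
    if not OBLIGATION_PATTERN.search(lowered):
        warnings.append("no clear obligation or observable behavior")
    return warnings


print(lint_requirement("The search should be fast and user-friendly."))
print(lint_requirement("The API returns a 404 for missing resources."))
```

The first statement is flagged for two vague terms and a missing obligation, while the second passes. Anything flagged goes back to the author before it enters the backlog.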

When you elicit requirements, having the right tools in your tech stack makes all the difference. aqua cloud, a dedicated test and requirement management platform, centralizes all your requirements in a collaborative platform accessible to stakeholders 24/7. The platform uses AI-powered assistance to transform raw input into structured documentation. aqua’s domain-trained AI Copilot generates requirements grounded in your project’s actual documentation. It uses RAG technology to ensure outputs speak your project’s language and reflect your specific industry standards. Full traceability automatically maps requirements to test cases and visually identifies coverage gaps. Whether you conduct interviews, workshops, or document analysis, aqua keeps everything connected and eliminates communication breakdowns. With 14 out-of-the-box integrations, including Jira, Confluence, and Jenkins, getting started with aqua is always easy.


Best Methods & Strategies for Requirements Elicitation

There are no universal rules for choosing requirement elicitation strategies. What works for a greenfield project won’t cut it for a legacy system refactor where the original architects retired five years ago. Match your elicitation technique to the problem: exploratory work demands depth, prioritization needs breadth, and alignment requires collaboration. Recent field research confirms what practitioners have known for years: interviews surface the deepest rationale and uncover tacit needs, surveys scale prioritization across hundreds of stakeholders, and focus groups work if facilitation is tight.

Choosing a technique is only part of what makes these requirement elicitation methods effective. You also need to measure how well they work: track discovery depth, coverage, prioritization quality, speed, and alignment. Combining complementary elicitation methods gives you a multi-angled view of the problem. The key benefits of requirements management become clear when you apply these elicitation techniques properly.

No technique guarantees a successful outcome, but following a specific technique in a disciplined manner has three major advantages. First, if it is applied throughout the process, it will tend to improve the consistency and coherence of the full requirements set and filter out extraneous and irrelevant requirements. Second, participation in repeated requirements discussions will lead users to adopt a similar discipline among themselves.

Brad Bigelow, posted on Quora

1. Documenting a Clear Project Vision

Your vision statement needs to be concrete enough that your team can use it to make trade-off decisions. Try this template:

“For [target user group], who [user need or pain point], the [product/feature name] is a [product category] that [key benefit]. Unlike [existing alternatives], our solution [unique value proposition].”

Pair that statement with a lightweight roadmap: phases, milestones, and success metrics. A simple story map showing “Q1: Core execution visibility, Q2: AI-assisted failure triage, Q3: Integration with Jira” gives stakeholders context for when their needs might land. Having a solid requirements management plan ensures your vision translates into actionable work. QA teams should review vision statements to understand quality priorities and testing scope.

2. Surveys and Interviews with Users

Surveys and interviews are your one-two punch for combining depth and scale. Interviews give you the rich, messy context, such as:

- Why someone’s workaround exists

- What constraints they’re operating under

- The edge case that breaks everything

Surveys let you validate those findings across dozens or hundreds of users and quantify priorities. Use them as complements rather than substitutes. QA teams should participate in interviews to identify edge cases and validate acceptance criteria.

Here are best practices for running effective virtual interviews:

- Recruit for coverage: Ensure you have participants across roles, experience levels, and use contexts.

- Send a one-page brief up front: Include purpose, topics you’ll cover, rough timebox, recording consent, and the tools you’ll use.

- Open with context reinstatement: “Walk me through the last time you debugged a flaky test.” Ground the conversation in real behavior, not hypotheticals.

- Use screen-sharing ethnography: Have participants demonstrate tasks while narrating their thought process when you can’t observe in person.

- Don’t treat AI transcripts as gospel: Tools like Teams Copilot and Zoom AI Companion can auto-generate transcripts, but confirm key statements verbally and follow up with member-checking.

- Apply progressive probing: Start with facts, then feelings, then failures and workarounds, then constraints, then success metrics.

Crafting engaging surveys: Build from qualitative findings first. Don’t invent questions in a vacuum. Write items that map to decision-making: trade-offs, frequency, and severity. Use attention checks, logic branches, and balanced Likert phrasing to catch careless responses. Pilot with 10–15 users before the full launch. When analyzing responses, combine importance × satisfaction scoring to surface high-ROI requirements. Publish your prioritization rationale back to stakeholders.
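One possible reading of importance × satisfaction scoring is to multiply how important an item is by how far current satisfaction falls short of the top of the scale. The sketch below assumes a 1–5 Likert scale and uses invented responses; the formula and the names `priority_score` and `SCALE_MAX` are illustrative assumptions, not a standard method.

```python
from statistics import mean
from typing import List

SCALE_MAX = 5  # assumed 1-5 Likert scale


def priority_score(importance: List[int], satisfaction: List[int]) -> float:
    """Mean importance multiplied by the mean satisfaction gap.

    High importance combined with low satisfaction yields the highest score.
    """
    return mean(importance) * (SCALE_MAX - mean(satisfaction))


# Invented survey responses for two candidate requirements.
responses = {
    "Unified coverage dashboard": {"importance": [5, 4, 5], "satisfaction": [2, 1, 2]},
    "Dark mode": {"importance": [2, 3, 2], "satisfaction": [3, 4, 3]},
}

ranked = sorted(
    responses,
    key=lambda name: priority_score(**responses[name]),
    reverse=True,
)
for name in ranked:
    print(f"{name}: {priority_score(**responses[name]):.2f}")
```

Whatever formula you settle on, publishing it alongside the ranking lets stakeholders see why an item landed where it did.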

3. Observing Users in Focus Groups

Focus groups and contextual observation let you watch how people actually work, not how they think they work. Observation is gold for uncovering workarounds, inefficiencies, and environmental constraints that never come up in interviews. Focus groups create a collaborative dynamic where one participant’s comment sparks another’s insight. These techniques work particularly well when you need to elicit requirements that users struggle to articulate.

For remote focus groups:

- Keep the headcount small: Limit to 4–7 participants to avoid chaos and ensure everyone gets airtime.

- Use digital whiteboards with pre-built templates: Include anonymous sticky notes, silent brainstorming rounds, and dot-voting for prioritization.

- Structure sessions with clear prompts: “Think about the last time a critical test failed in production. What information did you need immediately?”

- Time-box each activity: Use breakout rooms for sub-discussions and rotate reporters so no single person controls the narrative.

Documentation needs to be tight. Capture not just what people say but the context: screenshots of their workflows, snippets of workaround scripts, the sequence of tools they bounce between. Tools with AI clustering can turn a chaotic board of sticky notes into grouped themes, but you still need human validation. After the session, synthesize your observations into draft requirements and check them against the ISO/IEC/IEEE 29148 quality bar.

4. Brainstorming Sessions with Stakeholders

Set a specific goal for each session such as:

- Generate 20+ ideas for reducing test flakiness.

- Identify assumptions we’re making about user behavior.

Prompts like “How Might We” may also be useful to frame challenges as opportunities. Start with silent brainstorming. Give everyone 5–10 minutes to jot ideas on digital sticky notes anonymously. This levels the playing field and avoids anchoring bias. Then cluster ideas, use AI tools to draft themes, and let the group refine. Move to dot-voting to surface top priorities.

Facilitation matters. Assign a neutral facilitator to manage time, redirect tangents, and call out when the group is converging too early. Capture decisions and non-decisions explicitly. Export your whiteboard artifacts into a draft requirements doc immediately. Strike while the iron’s hot and the shared context is fresh.

5. Prototyping and Mockups

Prototypes are rough, clickable sketches that people can interact with. They don’t need to be polished. Low-fidelity wireframes in Figma are often enough to validate workflows and interaction patterns. For QA-focused tools, even a static mockup showing “test results dashboard with filters and drill-down to logs” can surface questions like, “Do we show in-progress tests?” Using a requirements engineering tool alongside your prototyping efforts helps maintain consistency.

How to run prototype walkthroughs:

- Use collaborative prototyping tools with AI assists: FigJam and Miro can turn sticky-note brainstorms into rough wireframes.

- Treat outputs as conversation starters, not specs: Walk through the prototype in a live session.

- Have stakeholders narrate their actions: Ask them to explain what they’d do, where they’d click, what they’d expect to happen.

- Record hesitations and suggested tweaks: Watch for “Wait, where’s…?” moments.

- Translate interactions into requirements: “User can filter test runs by environment, status, and date range” becomes testable because you’ve already validated the UI affordance.

QA teams should review prototypes to validate testability and identify missing states like error conditions.
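Once a walkthrough has confirmed the filter behavior, the requirement “User can filter test runs by environment, status, and date range” maps onto a small, testable function. This is a sketch under assumptions: `RunRecord`, `filter_runs`, and the sample data are hypothetical, and a real tool would query a datastore rather than a list.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class RunRecord:
    environment: str
    status: str
    run_date: date


def filter_runs(
    runs: List[RunRecord],
    environment: Optional[str] = None,
    status: Optional[str] = None,
    start: Optional[date] = None,
    end: Optional[date] = None,
) -> List[RunRecord]:
    """Return runs matching every supplied filter; None means 'no filter'."""
    return [
        r for r in runs
        if (environment is None or r.environment == environment)
        and (status is None or r.status == status)
        and (start is None or r.run_date >= start)
        and (end is None or r.run_date <= end)
    ]


# Invented sample data.
runs = [
    RunRecord("staging", "failed", date(2024, 1, 10)),
    RunRecord("staging", "passed", date(2024, 1, 12)),
    RunRecord("production", "failed", date(2024, 2, 1)),
]

assert len(filter_runs(runs, environment="staging", status="failed")) == 1
assert len(filter_runs(runs, start=date(2024, 1, 11))) == 2
```

The missing states QA tends to spot in prototypes, such as an empty result set or an end date before the start date, become additional test cases against the same function.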

6. Document and Process Analysis

Start with structured artifacts: existing requirements docs, API specs, architecture diagrams, test plans. Then contrast them with operational reality: support tickets reveal pain points the original spec missed, while production logs show how features are actually used. Look for patterns. If the same type of bug keeps escaping, there’s probably a missing non-functional requirement around validation or error handling.

Process flows and workflow diagrams are gold for eliciting implicit requirements:

- Map the current state: How does a test request move from Jira ticket to CI execution to results dashboard?

- Identify handoffs and bottlenecks: Where do things get stuck?

- Extract implied requirements: Each step implies requirements around data formats, APIs, permissions, and notifications.

- Validate findings with process owners: “I see in the ticket queue that test environment setup failures are the top blocker. Is that still true? What would ‘good’ look like?”

- Capture refined requirements: Trace them back to source documents and flag anything outdated or contradictory for stakeholder resolution.
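Spotting patterns in operational data does not need heavy tooling. As a minimal sketch, the snippet below counts support tickets by category to find candidate blockers to raise with process owners. The ticket structure and category labels are invented for illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Invented sample tickets; a real export would come from your ticketing system.
tickets: List[Dict[str, object]] = [
    {"id": 1, "category": "environment setup"},
    {"id": 2, "category": "flaky test"},
    {"id": 3, "category": "environment setup"},
    {"id": 4, "category": "permissions"},
    {"id": 5, "category": "environment setup"},
    {"id": 6, "category": "flaky test"},
]


def top_blockers(items: List[Dict[str, object]], limit: int = 3) -> List[Tuple[str, int]]:
    """Return the most frequent ticket categories with their counts."""
    counts = Counter(str(t["category"]) for t in items)
    return counts.most_common(limit)


for category, count in top_blockers(tickets, limit=2):
    print(f"{category}: {count} tickets")
```

The counts are a prompt for the validation conversation, not a requirement in themselves.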

7. Apprenticing and Job Shadowing

For QA contexts, shadowing means sitting with a tester during a release cycle. Observe how they manually verify smoke tests, how they triage failures, and what information they pull from logs or dashboards. You’ll spot friction points in real time. Maybe they’re copying test IDs between systems because there’s no API integration, or they’re rerunning flaky tests three times because the retry logic is unreliable. Each workaround represents a requirement in disguise.

Remote shadowing tips:

- Use screen-sharing with narration: Ask the user to walk through a typical task while thinking aloud, pausing to explain decisions.

- Look for specific behaviors: Hesitations, workarounds, copy-paste actions, and places where the user checks external references.

- Synthesize and validate: After shadowing, draft requirements and confirm: “I noticed you manually check three different dashboards for test coverage. Would a unified view help, or is there a reason you need them separate?”

8. Questionnaires and Checklists

Questionnaires and structured checklists are your scalable, asynchronous fallback when you can’t get everyone in the same room and need to gather input without real-time facilitation. They don’t offer the depth of interviews, but they’re efficient for baseline data collection and for reaching stakeholders who work across time zones.

Best practices for writing requirement elicitation checklists:

- Use standards-based checklists: ISO/IEC/IEEE 29148 and BABOK remind you to ask about functional, non-functional, interface, data, regulatory, and operational requirements.

- Walk through the list with stakeholders: Flag anything marked “critical” and probe for specifics.

- For test automation platforms, include: Environment setup requirements, test execution triggers, reporting granularity, notification mechanisms, security/access controls, scalability targets, disaster recovery, and third-party integrations.

Here’s one approach to analyzing questionnaire and checklist responses:

- Cluster similar answers: Identify outliers and cross-reference with other elicitation data.

- When you spot a strong signal: Schedule follow-up sessions to explore solutions. Don’t treat questionnaire findings as final requirements.

- Treat responses as hypotheses: The goal is efficient breadth, then targeted depth.

Be smart, ask good questions, understand what people really want to achieve (not just what they say they want). Keep good notes, link to them from subsequent docs.

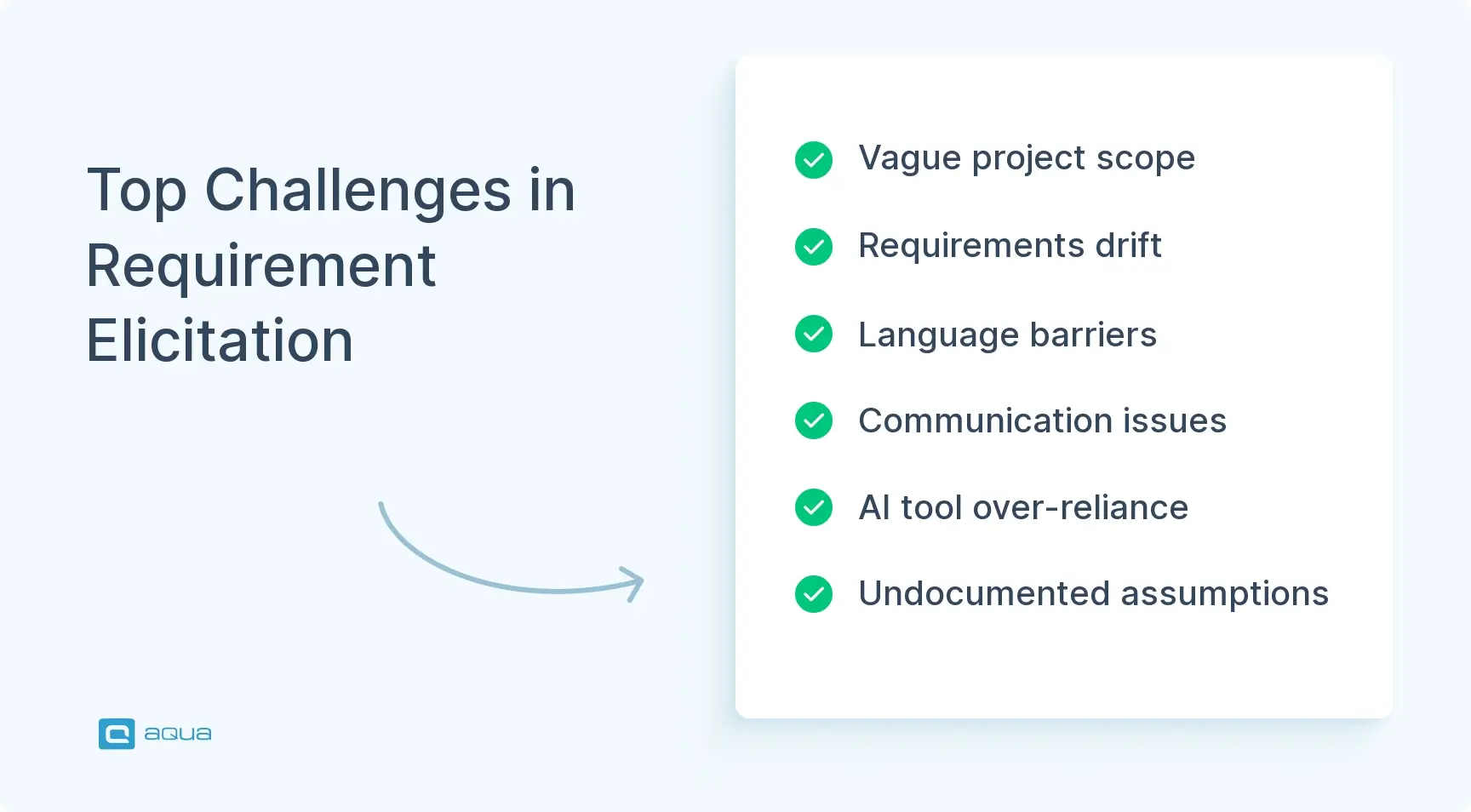

Challenges in Requirements Elicitation

Even with the best requirement elicitation methods, projects encounter obstacles. Here are the most common challenges teams face:

Vague Project Definition

Leadership requests “better test coverage” without defining what “better” means or which scenarios matter most. Without clear boundaries, elicitation sessions produce feature lists that don’t address coherent problems.

Lock in three things before your first session:

- Define the specific problem you’re solving

- Document what success looks like with measurable criteria

- List what’s explicitly in scope and what’s out

Get sign-off from sponsors, then use these as filters for every proposed requirement.

Requirements Drift

The market shifts, competitors launch features, and internal priorities change mid-sprint. Some change is inevitable, but uncontrolled change damages project outcomes.

Establish strategies for managing requirements changes:

- Define who can request changes and the approval chain

- Set up a forum where changes get evaluated

- Assess impact on scope, schedule, and budget before accepting

- Track who requested what change, when, and why
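A change log that captures who, what, when, and why can be as simple as one record per request. The sketch below is a hypothetical structure, with field names and sample entries invented for illustration; most teams would keep this in their requirements tool rather than in code.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class ChangeRequest:
    req_id: str          # requirement being changed
    requested_by: str    # who asked for the change
    requested_on: date   # when it was requested
    reason: str          # why the change is needed
    impact: str          # assessed effect on scope, schedule, and budget
    approved: bool = False


def history(log: List[ChangeRequest], req_id: str) -> List[ChangeRequest]:
    """Return all change requests for one requirement, oldest first."""
    return sorted(
        (c for c in log if c.req_id == req_id),
        key=lambda c: c.requested_on,
    )


# Invented sample entries.
log = [
    ChangeRequest("REQ-1", "Product owner", date(2024, 3, 5),
                  reason="Add status filter", impact="+2 days", approved=True),
    ChangeRequest("REQ-2", "Ops lead", date(2024, 3, 1),
                  reason="Change retention period", impact="none"),
    ChangeRequest("REQ-1", "QA lead", date(2024, 2, 20),
                  reason="Clarify date range bounds", impact="none", approved=True),
]

for change in history(log, "REQ-1"):
    print(change.requested_on, change.requested_by, change.reason)
```

Requiring the `impact` field before approval enforces the “assess before accepting” rule from the list above.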

Language Barriers Between Teams

Product thinks in user journeys. Dev thinks in APIs and data models. QA thinks in test scenarios. Ops thinks in uptime and incident response. Same words mean different things to different people.

Create a shared vocabulary anchored to concrete examples. When someone says “fast,” ask: “In this context, does ‘fast’ mean sub-200ms response time or three clicks instead of five?”

Communication Breakdowns in Distributed Teams

Remote work means missing nonverbal cues. Stakeholders lose focus in consecutive video calls. Critical context gets lost in asynchronous communication.

Countermeasures:

- Send a screencast or doc before sessions so people arrive prepared

- Use digital whiteboards and mockups to anchor discussions

- Call on people by name to avoid the loudest person dominating

- Use chat for side questions during live sessions

- Confirm critical decisions verbally, not just through AI summaries

Over-Reliance on AI Tools

Modern collaboration platforms can cluster sticky notes and transcribe conversations. But AI summaries can miss context, misinterpret jargon, and sometimes generate “decisions” that never happened.

Always validate AI outputs:

- Send recaps to stakeholders and ask them to confirm accuracy

- Check each requirement against ISO/IEC/IEEE 29148 standards

- Tie conclusions back to the original session recording

Implicit Assumptions Going Undocumented

Teams assume “everyone knows” how a process works. Those assumptions don’t get written down. Six months later, someone new joins and the tribal knowledge is gone.

Make implicit knowledge explicit:

- Document why decisions were made, not just what was decided

- Capture workarounds and the constraints that created them

- Use job shadowing to spot what users have stopped noticing

Requirements elicitation can be quite challenging with distributed teams and complex stakeholder needs. Many teams turn to aqua cloud, an AI-driven test and requirement management solution. aqua provides a centralized hub where all the elicitation techniques we discussed come together in one collaborative ecosystem. Its domain-trained AI transforms stakeholder input into comprehensive, actionable test cases in seconds. AI Copilot generates content grounded in your specific project documentation through RAG technology. Every generated requirement reflects your organization’s terminology, standards, and priorities. As a result, you get a shared understanding that reduces the miscommunication and assumptions behind costly rework. aqua supports both Agile and Waterfall methodologies with customizable workflows. Real-time dashboards provide instant visibility into requirements coverage, progress, and compliance. Native Jira integration, 13 more third-party integrations, and API access help aqua fit into your existing tools.


Conclusion

Requirements elicitation demands the same rigor and attention as any other critical development phase. Get it right, and teams build testable features that solve actual problems. Skip it, and you’re debugging miscommunication in production while your release timeline burns. The eight requirement elicitation strategies in this article give you a versatile toolkit grounded in what works for teams shipping software today. While Product Managers and Business Analysts typically lead elicitation, QA participation ensures requirements are testable and complete. Combine these requirements elicitation methods, measure their effectiveness, and treat elicitation as an ongoing conversation.