When you apply for a QA engineer, manager, or tester role, it is crucial to have a strong understanding of agile testing. If you can demonstrate your knowledge of agile testing, you will stand out from most candidates. Even if the company has not committed to agile testing, you still demonstrate the skills and experience to adapt to a fast-paced, modern, collaborative development environment.

However, knowing what agile testing is and how to apply it does not guarantee that you will pass the job interview. Application processes for QA positions are highly competitive, and some questions will require you to apply 120% of what you know and have experienced. The extra 20% covers questions that are either outright confusing or seem easy yet are easy to get wrong. Find our pointers for some of the tricky QA interview questions, along with answers to them, below.

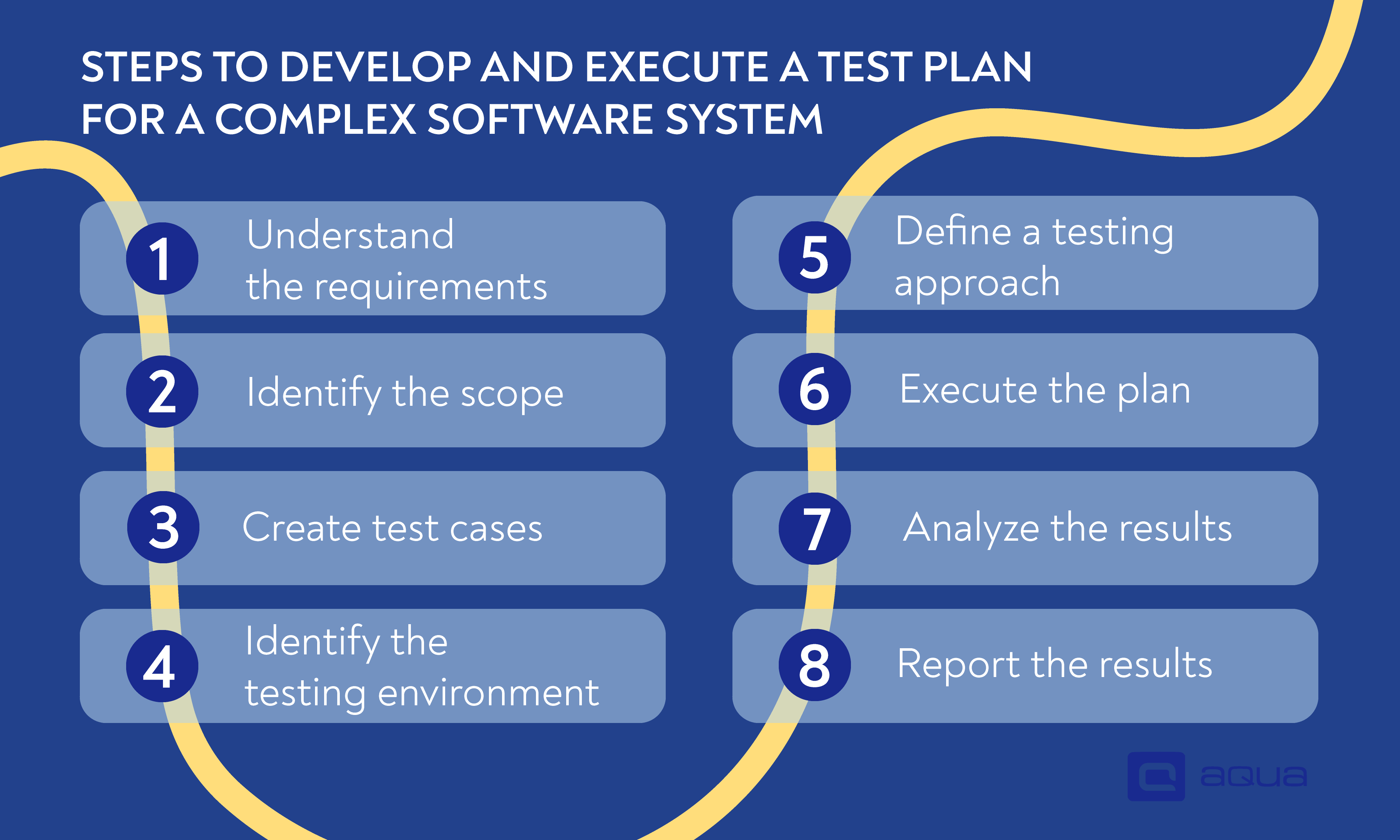

1. Describe your steps to develop and execute a test plan for a complex software system.

This is one of the most common software QA interview questions. It requires a well-structured, extensive answer that shows the candidate’s approach to tackling a complex problem. The candidate should also be able to demonstrate an understanding of software testing principles, technical knowledge, and critical thinking.

Answer: To develop and execute a test plan for a complex software system, you need to implement a strategy including these steps:

- Understand the requirements — Before you develop a test plan, it is crucial to understand both functional and non-functional requirements and identify potential risks.

- Identify the scope — Identify the main features and functionalities to test and outline the types of testing you will use.

- Create test cases — Make test cases that ensure each feature and functionality is tested thoroughly while prioritising the most critical and impactful features.

- Identify the testing environment — Your testing environment should have the required hardware, software, and network requirements for the test.

- Define a testing approach — Sort out the testing types and the criteria for software passing or failing the testing stage.

- Execute the plan — Execute your test plan while documenting each test’s results.

- Analyze the results — Verify that the software met the passing criteria and identify potential improvement areas.

- Report the results — Summarise the test results, point out discovered issues, and identify risks.

As you describe these steps to the interviewer, it would be great to touch on the business value of what you’re doing.

2. What is exploratory testing, and when do you use it?

Not all QA interview questions are about black-box or white-box testing, so you should be prepared to answer various kinds of questions, including ones about exploratory testing. As exploratory testing is only needed in a handful of cases, it might be tricky for the candidate to come up with suitable situations.

Answer: Exploratory testing is an approach where you explore the application during the test rather than following a predefined plan. It requires the tester to identify areas for improvement, issues, and potential defects as they go. There are several instances where you might use exploratory testing:

- There are new testers in the project

- You need to minimise test script writing

- New features should be tested

- The user experience should be tested

3. How do you test a complex API that involves multiple endpoints and integrations?

This is also one of the interview questions for QA that you can come across, especially if the position requires developing and executing a comprehensive test plan for a complex API. It can be far from trivial for some candidates, as it requires a deep understanding of API testing concepts and techniques.

Answer: Here is how you should test a complex API with multiple endpoints and integrations:

- Understand the requirements and specifications for the API

- Plan performance, security, and scalability tests that you would normally use when testing an API

- Create test cases that cover endpoints and integrations, including positive and negative scenarios

- Design tests to validate API functionality

- Use advanced QA testing tools to automate the API testing process
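As a rough illustration of covering positive and negative scenarios, the sketch below runs a table of cases against a stand-in handler. The `get_user` function, its status codes, and the sample data are hypothetical and exist only so the example runs without a network; in a real project the same table could drive calls to the actual endpoints.

```python
# Hypothetical in-memory stand-in for an API endpoint (not a real service).
USERS = {1: {"id": 1, "name": "Ada"}}


def get_user(user_id):
    """Return a (status_code, body) pair, mimicking GET /users/{id}."""
    if not isinstance(user_id, int) or isinstance(user_id, bool) or user_id <= 0:
        return 400, {"error": "invalid id"}
    if user_id not in USERS:
        return 404, {"error": "not found"}
    return 200, USERS[user_id]


# Each case: (description, input, expected status code).
CASES = [
    ("existing user (positive)", 1, 200),
    ("unknown user (negative)", 999, 404),
    ("negative id (negative)", -5, 400),
    ("non-numeric id (negative)", "abc", 400),
]


def run_cases(cases):
    """Run every case and return the descriptions of those that failed."""
    failures = []
    for description, user_id, expected_status in cases:
        status, _body = get_user(user_id)
        if status != expected_status:
            failures.append(description)
    return failures


failed = run_cases(CASES)
print("failed cases:", failed)
```

Keeping the cases in a table makes it cheap to add another endpoint or another invalid input without touching the runner.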

4. How do you measure the effectiveness of your testing efforts?

As you apply for the more senior positions, you will get asked more quality assurance interview questions about the results of the cases you worked on. These questions require a mix of practical experience and theoretical knowledge.

Answer: Several QA metrics allow you to measure the effectiveness of your testing efforts:

- Defect detection rate: This metric is crucial because it compares the number of defects found during testing to the number found in production. A higher detection rate indicates higher coverage and diligent test execution.

- Test coverage: It measures the percentage of the code or functionality you have tested. The higher your coverage, the lower the chance of facing unexpected bugs that are expensive to fix in production.

- Test case efficiency: This metric focuses on the ratio of successful tests to the total runs. High efficiency means you have well-designed and well-executed test cases.

- Customer satisfaction: Whatever product or service you offer, customer satisfaction should be among the most crucial measures of your effectiveness, and testing processes are no exception. Although poor customer satisfaction does not necessarily indicate poor QA, high customer satisfaction suggests that the software performs well, which in turn points to good QA.
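To make the first three metrics concrete, here is a minimal sketch of how they might be computed. The formulas are one common way to express them rather than a fixed standard, and the function names and numbers are made up for illustration.

```python
def defect_detection_rate(found_in_testing, found_in_production):
    """Share of all known defects that were caught before release."""
    total = found_in_testing + found_in_production
    return found_in_testing / total if total else 0.0


def requirement_coverage(requirements, tested_requirements):
    """Share of requirements that have at least one executed test."""
    unique_requirements = set(requirements)
    if not unique_requirements:
        return 0.0
    covered = unique_requirements & set(tested_requirements)
    return len(covered) / len(unique_requirements)


def pass_ratio(passed_runs, total_runs):
    """Ratio of successful test runs to all test runs."""
    return passed_runs / total_runs if total_runs else 0.0


# Made-up sample numbers, purely for illustration.
ddr = defect_detection_rate(found_in_testing=45, found_in_production=5)
coverage = requirement_coverage(["R1", "R2", "R3", "R4"], ["R1", "R2", "R4"])
ratio = pass_ratio(passed_runs=180, total_runs=200)

print(f"detection rate {ddr:.0%}, coverage {coverage:.0%}, pass ratio {ratio:.0%}")
```

In an interview, walking through numbers like these shows that you understand what each metric actually measures, not just its name.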

With aqua, keeping track of your detection rate and test coverage has never been easier. aqua provides a comprehensive dashboard that presents your test coverage for each requirement. You can effortlessly monitor your progress with a detailed history of your test runs and identify areas of improvement. The live mode dashboard displays your defect detection rate in real-time, allowing you to identify and address issues as they arise, guaranteeing that your software is always of the highest quality.

Give it a try today and see why so many teams rely on aqua to manage their testing efforts and deliver high-quality software more efficiently.

5. How would you test a mobile app that needs to work on different devices and platforms?

Among the top interview questions for QA, you can also come across questions about device and platform diversity. This question can cause problems for some candidates because it requires a modern approach to mobile testing, knowledge of various testing techniques and tools, and an understanding of the complexities of multiple devices.

Answer: You can test a mobile app’s functionality on various devices via the following main steps:

- Choose a framework that supports testing on multiple devices and platforms

- Set up the testing environment

- Create a test plan

- Develop and execute test scripts

- Analyse the results

6. How would you identify and mitigate possible vulnerabilities in security testing?

Security testing questions are an essential part of QA engineer interview questions, so you should expect at least one in your upcoming interview. This question might be challenging for some candidates as it requires knowledge of security testing and the ability to think critically, proactively, and quickly about mitigating vulnerabilities.

Answer: To identify and mitigate vulnerabilities in security testing, you should follow these steps:

- Conduct a thorough security assessment of the application

- Use both automated and manual techniques to identify possible vulnerabilities like cross-site scripting, buffer overflows, or injection attacks

- Prioritise the vulnerabilities with the greatest potential impact

- Develop a plan to tackle each one of these vulnerabilities

- Test the results of the mitigation measures and see if they addressed the vulnerabilities well

- Run security tests regularly to catch new security issues as they emerge

7. How do you decide when a bug is severe enough to block the release?

This is also a challenging QA interview question, as the candidate should be able to balance the factors and decide based on the bug’s severity. Being able to answer questions that require critical thinking and technical knowledge is a big plus for the candidate.

Answer: The analysis to decide whether a bug is severe enough to block the release should include these factors:

- The impact of the bug on the functionality

- The bug’s occurrence frequency

- The bug’s effect on the end user’s experience

- Reproducibility of the bug

- Data loss or security vulnerabilities caused by the bug

- Brand impact of the bug

If the bug appears too often, is easy to reproduce, or affects the aspects mentioned above, you should consider delaying the release to deal with the bug.

8. Have you ever faced a situation where QA was not given enough time to test a product properly? And how did you handle it?

This QA interview question can also be tricky as it requires the candidate to provide a balanced answer. The candidate should be able to express how they would keep cool under pressure, avoid conflict with their colleagues and still deliver high-quality testing.

Answer: It is not uncommon for QA management problems to occur during the testing process because, from time to time, QA is given limited time or resources to complete testing. In these situations, ensuring that the project stakeholders understand the risks of inadequate testing is crucial. You can advocate for additional resources or time; if you are unsuccessful, you can prioritise your testing efforts based on the most critical aspects of the product. Communication is vital in these situations.

9. How do you ensure efficient testing in a large-scale enterprise environment?

This QA interview question also can be tricky because the answer depends on many variables. The interviewer will be looking for a candidate who can demonstrate deep knowledge of testing best practices and also provide a strategy that would fit in a large enterprise environment, even if they do not have the necessary experience.

Answer: Using an enterprise testing tool is the best way to ensure efficient and effective testing in a large-scale environment. It allows for:

- Centralised management of testing processes

- Automated test execution

- Comprehensive reporting abilities

- A better streamlining of testing efforts

- Consistent and high-quality results

10. How do you write test cases and make sure they are thorough?

Test cases and how you write them are among the most common QA testing interview questions you might face. Cem Kaner, a well-known professor of software engineering, is known for his popular testing quote, “Tester is only as good as the test cases he can think of.”

This question can be tricky for some candidates: if you have never written test cases for real software, you need a very solid theoretical grounding to answer it well. Before going to an interview, make sure you revisit some actual test case examples.

Answer: My approach to writing test cases includes these crucial steps to make sure they are thorough:

- Review the requirements and specifications of the software

- Identify various scenarios (both positive and negative) and create test cases for each requirement

- Prioritise the test cases based on their importance

- Review the cases and their prioritisation with the QA lead/PO to ensure that the test coverage is complete

If you are looking to streamline your testing efforts, the aqua Copilot is the ultimate solution for you. With aqua’s AI assistant, you can automatically create entire test cases from requirements, remove duplicate tests, and prioritise essential tests for maximum impact. aqua also offers unlimited free basic licenses for manual QA, making it accessible to all teams, regardless of size. With expert defect tracking, REST API support, unlimited ticket capacity, and frequent feature updates, aqua has everything you need for a seamless testing experience.


11. Explain the concept of regression testing and its importance in software development.

Answer: Regression testing involves retesting parts of the software that were previously working fine to ensure they still function correctly after changes or new additions. It’s crucial in software development because as we update or add new features, we risk unintentionally breaking existing functionalities. Regression testing acts as a safety net, ensuring the integrity of the software by verifying that the old, functional parts remain intact despite the changes. It helps maintain the quality and reliability of the software throughout its evolution.

12. What role does risk analysis play in test planning, and how do you conduct it effectively?

This is one of the basic QA interview questions intended to gauge the candidate’s understanding of risk management in testing strategies.

Answer: Risk analysis in test planning is pivotal as it helps us identify potential areas prone to defects, guiding focused testing efforts. Effectively conducting risk analysis involves assessing factors like impact, probability, and mitigation strategies for identified risks, ensuring prioritisation aligns with critical areas. Risk analysis provides a structured approach to understanding and managing uncertainties that could affect project objectives. To conduct effective risk analysis, teams typically employ risk matrices, brainstorming sessions, and historical data analysis techniques. This process ensures a comprehensive understanding of potential challenges, allowing project stakeholders to plan and implement mitigation strategies to safeguard project success proactively.
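A simple risk matrix can be sketched in a few lines. The example below assumes hypothetical 1 to 5 ratings for impact and probability and multiplies them into a score; the areas, ratings, and scoring rule are illustrative assumptions, and teams often use different scales or weightings.

```python
# Hypothetical feature areas with 1-5 ratings agreed on by the team.
risks = [
    {"area": "payment processing", "impact": 5, "probability": 3},
    {"area": "profile page layout", "impact": 2, "probability": 4},
    {"area": "report export", "impact": 3, "probability": 2},
]


def risk_score(risk):
    """Classic risk-matrix score: impact multiplied by probability."""
    return risk["impact"] * risk["probability"]


# Highest score first: these areas get tested earliest and most deeply.
ranked = sorted(risks, key=risk_score, reverse=True)

for risk in ranked:
    print(f'{risk["area"]}: score {risk_score(risk)}')
```

The ranking is only as good as the ratings behind it, which is why brainstorming sessions and historical defect data matter as inputs.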

13. Describe the difference between smoke testing and sanity testing. When and how would you perform each?

Answer: Smoke testing examines fundamental software functionalities. It is like ensuring a car’s engine starts before a long drive. It’s executed upfront, validating basic operability to confirm the build’s stability before in-depth testing. In contrast, sanity testing verifies specific features post-modifications, acting like a quick health check after tweaks, ensuring critical functions aren’t compromised. Smoke testing precedes extensive testing phases, while sanity testing follows specific updates to uphold the overall software integrity.

14. What are the advantages and disadvantages of manual and automated testing?

Answer: Manual testing offers flexibility and adaptability for exploratory scenarios, allowing testers to explore the software intuitively. However, it can be time-consuming for repetitive tasks and might lack scalability for larger projects. Automated testing, on the other hand, provides efficiency for repetitive tasks, ensuring consistency and broader test coverage. Yet, it demands a significant initial setup and might not suit scenarios requiring human intuition. An effective testing strategy often balances both approaches to maximise their strengths.

15. How do you handle the situation when a developer disputes a bug you reported?

This QA tester interview question aims to assess conflict resolution and communication skills in a testing context.

Answer: Open communication is key when a developer disputes a reported bug. I respectfully present evidence, including detailed observations or screenshots, fostering a collaborative dialogue. It’s about understanding each other’s perspectives to reach a consensus. Our primary goal is to ensure the software’s quality isn’t compromised, prioritising a solution over individual viewpoints.

When presenting evidence, including detailed observations or screenshots is important as it encourages a collaborative dialogue. As part of aqua cloud, our bug-recording solution Capture greatly enhances this collaborative process. With ‘Captured’ defect reports, you’ll gain clear insights into the reported issues, minimising misunderstandings and facilitating smoother communication. Capture promotes a streamlined bug resolution process, helping you focus on solutions rather than disputes, ultimately contributing to elevated software quality.


16. What strategies would you use to ensure proper test coverage in a project with constantly changing requirements?

Answer: I’d adopt agile testing methodologies in a project with evolving requirements, continuously aligning test strategies with changing project needs. Embracing risk-based testing, I’d prioritise testing based on critical features or areas susceptible to change. Close collaboration with stakeholders and frequent feedback loops help ensure updated test coverage aligns with shifting priorities. Additionally, employing automation for regression testing of core functionalities allows swift adaptation to new changes while maintaining quality assurance. Regular reevaluation of test strategies ensures coverage adapts seamlessly to evolving requirements.

17. How do you prioritise test cases when you have limited time for testing?

Answer: Prioritising test cases with limited time needs a strategic approach. I’d begin by categorising test cases based on critical functionalities, high-risk areas, and core user workflows. Employing risk-based testing principles, I’d focus on scenarios with the highest potential impact on end-users or system integrity. Additionally, I’d leverage techniques like boundary value analysis or equivalence partitioning, which allow for efficient coverage of critical scenarios within time constraints. Prioritisation remains flexible, allowing adjustments based on immediate project needs and continual reassessment of testing priorities.
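Boundary value analysis and equivalence partitioning both cut a large input space down to a handful of representative values. The sketch below assumes a hypothetical rule, an age field that accepts 18 to 65 inclusive, and shows which inputs each technique would pick.

```python
def boundary_values(minimum, maximum):
    """Values at and just around both edges of a valid integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def equivalence_classes(minimum, maximum):
    """One representative value per partition: below, inside, above the range."""
    return {
        "below range (invalid)": minimum - 10,
        "inside range (valid)": (minimum + maximum) // 2,
        "above range (invalid)": maximum + 10,
    }


# Hypothetical rule under test: an age field accepts 18 to 65 inclusive.
def is_valid_age(age):
    return 18 <= age <= 65


for value in boundary_values(18, 65):
    print(f"boundary {value}: accepted={is_valid_age(value)}")

for label, value in equivalence_classes(18, 65).items():
    print(f"{label} {value}: accepted={is_valid_age(value)}")
```

Nine inputs stand in for every possible age, which is the kind of saving that matters when time is short.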

18. Describe the steps you would take to ensure the reliability and validity of your test data.

Answer: To ensure the reliability and validity of test data, I’d take the following steps:

- Verify Accuracy: Cross-reference data against trusted sources or benchmarks to validate accuracy.

- Sanitise and Anonymise: Ensure sensitive data is sanitised and anonymised to comply with privacy regulations while retaining relevance for testing purposes.

- Maintain Data Consistency: Implement version control measures to maintain data consistency across testing cycles.

- Ensure Relevancy: Regularly update test datasets to ensure relevance and alignment with current testing needs.

- Continuous Monitoring: Validate test data against real-time sources or test environments to ensure ongoing accuracy and reliability.
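The sanitising step can be sketched as below. This is a minimal example under stated assumptions: the record fields, the salt value, and the `example.test` placeholder domain are hypothetical, and salted hashing is only one pseudonymisation technique. Real privacy compliance usually needs more than this.

```python
import hashlib


def pseudonymise_email(email, salt="test-env-salt"):
    """Replace an email with a stable placeholder derived from a salted hash."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}@example.test"


def sanitise_record(record):
    """Return a copy of the record with direct identifiers masked."""
    cleaned = dict(record)
    cleaned["email"] = pseudonymise_email(record["email"])
    cleaned["name"] = "Test User"
    return cleaned


# Hypothetical production-like record.
original = {"id": 42, "name": "Jane Doe", "email": "Jane.Doe@company.com", "plan": "pro"}
sanitised = sanitise_record(original)

print(sanitised)
```

Because the same input always maps to the same placeholder, relationships between records survive sanitisation, so the data stays useful for testing.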

Tired of the manual grind of creating massive test datasets? Say hello to aqua’s AI Copilot – your personal assistant for generating test data effortlessly. It’s not just smart; it’s genius. Trust it more than you trust your ChatGPT or Google Bard. With aqua’s AI Copilot, testing just got a whole lot smoother. Embrace the future of testing – because who wouldn’t want a sidekick that makes testing a breeze?


19. How do you approach testing for software that requires localisation and internationalisation?

This is one of the QA manager questions that aims to assess the candidate’s familiarity with testing strategies for globalised software.

Answer: Testing software requiring localisation and internationalisation involves understanding specific localisation needs, such as language, cultural nuances, and regional preferences, to define test scopes. It includes testing UI elements, content, date/time formats, and currency conversions to ensure compatibility with targeted locales. Internationalisation testing verifies the software’s adaptability to diverse locales, considering language support, character encoding, and cultural conventions. It also involves assessing functionality across various platforms, devices, and regions to ensure consistent performance. Collaboration with localisation experts and stakeholders is crucial to validate and refine testing strategies for optimal global usability.

20. How significant is a traceability matrix in software testing?

Answer: A traceability matrix is important in software testing as it serves as a comprehensive mapping tool, linking requirements to test cases and ensuring complete test coverage. It essentially provides a clear overview, allowing us to trace and validate if each requirement has associated test cases and if those test cases have been executed. This matrix helps identify gaps or redundancies in testing, ensuring that every aspect of the software is adequately validated against its intended functionalities. Moreover, it aids in impact analysis by showcasing which changes affect requirements, streamlining regression testing efforts and minimising risks associated with modifications. The traceability matrix acts as a navigational guide, ensuring alignment between project requirements and test coverage throughout the software development lifecycle.
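In its simplest form, a traceability matrix is a mapping from requirements to the test cases that cover them. The sketch below uses hypothetical requirement and test case IDs to show how such a mapping exposes gaps.

```python
# Hypothetical requirement IDs and test cases, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
test_cases = {
    "TC-1": {"covers": ["REQ-1"], "executed": True},
    "TC-2": {"covers": ["REQ-1", "REQ-2"], "executed": False},
    "TC-3": {"covers": ["REQ-3"], "executed": True},
}


def build_matrix(requirements, test_cases):
    """Map each requirement to the IDs of the test cases that cover it."""
    matrix = {req: [] for req in requirements}
    for tc_id, tc in test_cases.items():
        for req in tc["covers"]:
            if req in matrix:
                matrix[req].append(tc_id)
    return matrix


matrix = build_matrix(requirements, test_cases)

# Requirements with no test case at all.
untested = [req for req, tcs in matrix.items() if not tcs]

# Requirements that have test cases, none of which has been executed yet.
not_executed = [
    req
    for req, tcs in matrix.items()
    if tcs and not any(test_cases[tc]["executed"] for tc in tcs)
]

print("matrix:", matrix)
print("no test cases:", untested)
print("covered but never executed:", not_executed)
```

Reading the mapping in the other direction, from a changed requirement to its test cases, gives the list of tests to rerun for regression.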

21. How would you handle a situation where there are incomplete or ambiguous requirements for a project you're testing?

Answer: Encountering incomplete or ambiguous requirements in a project requires a proactive approach. I’d initiate communication with stakeholders or project managers to seek clarification on the unclear aspects. Simultaneously, I’d collaborate closely with the development team to analyze the existing requirements and devise potential scenarios or use cases based on the available information. Employing risk-based testing strategies, I’d prioritise testing efforts on critical functionalities or areas less impacted by ambiguity, aiming to ensure basic functionality while seeking clarification. Throughout this process, I’d meticulously document all discussions, assumptions made, and any decisions taken to maintain transparency and facilitate clear communication among project stakeholders. This collaborative and adaptive approach allows for continuous refinement of test scenarios as requirements evolve or become more defined.

22. Explain the process of test environment setup and its importance in testing.

Answer: Test environment setup involves several steps:

- Requirement Analysis: Understanding the system requirements and identifying the necessary hardware, software, and network configurations for testing.

- Environment Configuration: Setting up hardware infrastructure, installing operating systems, databases, middleware, and necessary software tools to mirror the production environment.

- Data Setup: Populating the test databases with relevant data to simulate real-world scenarios and ensure comprehensive testing coverage.

- Integration: Ensuring seamless integration among various components within the test environment to mirror the actual system architecture.

- Security Implementation: Configuring security measures to replicate production-level security settings and ensure test data confidentiality and integrity.

- Maintenance and Version Control: Regularly updating the test environment with new builds or versions to maintain alignment with the evolving software.

The importance of a well-prepared test environment is in its ability to replicate real-world conditions, allowing testers to conduct accurate and reliable testing. It aids in identifying issues early in the development cycle, minimising risks associated with deploying flawed software into production. Additionally, a properly set-up test environment enables effective regression testing, performance assessment, and validation of system functionalities, contributing to overall software quality and reliability.

23. Describe the difference between positive testing and negative testing. Provide examples.

Answer: Positive testing involves verifying that a system performs as expected with valid inputs, confirming the software functions correctly under normal conditions. Conversely, negative testing checks the system’s behaviour with invalid inputs or unexpected conditions, ensuring it handles errors or exceptional scenarios effectively.

- Positive Testing Example: Consider a login feature in an application. Positive testing involves entering valid credentials (username and password), confirming successful login, and validating the expected behaviour.

- Negative Testing Example: Continuing with the login feature, negative testing involves entering incorrect credentials, like an invalid username or password, to ensure the system responds appropriately, perhaps by displaying an error message or denying access. This validates how the system handles incorrect inputs or unauthorised access attempts.
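The login example can be sketched as a few assertions. The `login` function, the credential store, and the messages below are hypothetical stand-ins written only for this illustration; a real system would never store or compare plain-text passwords.

```python
# Hypothetical credential store and login function, for illustration only.
VALID_USERS = {"alice": "s3cret!"}


def login(username, password):
    """Return a (success, message) pair."""
    if not username or not password:
        return False, "Username and password are required"
    if VALID_USERS.get(username) != password:
        return False, "Invalid username or password"
    return True, "Welcome"


# Positive test: valid input leads to the expected success path.
assert login("alice", "s3cret!") == (True, "Welcome")

# Negative tests: invalid input is rejected with a clear error.
assert login("alice", "wrong") == (False, "Invalid username or password")
assert login("bob", "s3cret!") == (False, "Invalid username or password")
assert login("", "s3cret!") == (False, "Username and password are required")

print("all login checks passed")
```

Note that there are three negative cases for one positive case. That ratio is typical, since there are usually many more ways to get input wrong than right.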

24. What are the advantages and disadvantages of using exploratory testing over scripted testing?

Answer: Exploratory testing brings flexibility to adapt quickly to changing software requirements and discover unexpected defects that scripted testing might miss. It promotes creative exploration, enabling testers to identify issues while allowing simultaneous learning about the system efficiently. However, its unstructured nature might lead to challenges in detailed documentation, consistency, and ensuring complete coverage of all possible scenarios. Moreover, it heavily relies on testers’ expertise, which could impact consistency within a testing team.

In your first QA interview, expect fairly basic questions: how you write a test case, how you determine the priority of a test case, what goes into a bug report, what you would do if documentation doesn't exist, and so on.

25. How do you determine which test cases to automate and which ones to execute manually?

This software quality assurance interview question evaluates the candidate’s decision-making process regarding test automation.

Answer: When deciding between automated and manual testing, I focus on automating repetitive tasks like regression testing or scenarios requiring extensive data sets. These automated tests ensure efficiency and consistency, especially for stable functionalities. For cases needing exploratory or user-focused testing, I opt for manual execution to allow flexibility in exploring various scenarios, catching unforeseen issues, and adapting to evolving requirements. It’s crucial to strike a balance between automation’s benefits, like increased speed and coverage, and the adaptability and intuition that manual testing provides, particularly in dynamic or evolving projects.

26. How do you manage and prioritise defects found during testing, especially in a large-scale project with a lot of issues?

Answer: Managing and prioritising defects in a large-scale project involves meticulous categorisation based on severity, impact on critical functionalities, and potential risks to the end-user experience. I start by categorising defects into critical, major, and minor, focusing first on critical issues affecting core functionalities. Prioritisation considers factors like customer impact, frequency of occurrence, and business-critical features. Close collaboration with stakeholders helps align defect resolution with project goals, ensuring swift resolution of critical issues while balancing ongoing development efforts. Regular reevaluation and adjustment of priorities based on project dynamics are crucial to managing defects in large-scale testing scenarios effectively.

27. Describe the steps you would take to ensure the scalability of your test cases for a growing software system.

Answer: Ensuring test case scalability for a growing software system involves several steps. Initially, I’d adopt a modular approach to test case design, creating reusable components that accommodate new functionalities without extensive modification. Additionally, I’d implement parameterisation in test cases, allowing flexibility to adjust inputs for varied scenarios. Prioritising test coverage based on critical functionalities ensures efficient use of resources. Moreover, investing in automation frameworks that support scalability, like data-driven or keyword-driven testing, aids in efficiently managing an expanding test suite. Regular reviews and updates of test cases alongside software updates maintain alignment with evolving system requirements, ensuring scalable and comprehensive test coverage.
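Parameterised, data-driven testing can be sketched as follows. The test data lives apart from the test logic, so new scenarios are added as rows rather than as new code. The `shipping_fee` function and its threshold are hypothetical, and the data is inlined as text here only so the example is self-contained; in practice it could sit in a CSV file.

```python
import csv
import io


# Hypothetical function under test: free shipping from an order total of 50.
def shipping_fee(order_total):
    return 0.0 if order_total >= 50 else 4.99


# Test data kept separate from the test logic.
TEST_DATA = """order_total,expected_fee
10,4.99
49.99,4.99
50,0.0
120,0.0
"""


def run_data_driven(data_text):
    """Run one check per data row and return (input, passed) pairs."""
    results = []
    for row in csv.DictReader(io.StringIO(data_text)):
        actual = shipping_fee(float(row["order_total"]))
        expected = float(row["expected_fee"])
        results.append((row["order_total"], actual == expected))
    return results


results = run_data_driven(TEST_DATA)
for order_total, passed in results:
    print(f"order total {order_total}: {'PASS' if passed else 'FAIL'}")
```

As the system grows, the runner stays the same size while the data table absorbs the new cases.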

28. Discuss the importance of usability testing and the key factors you consider when conducting such tests.

Answer: Usability testing is crucial for ensuring user-centric software. When conducting such tests, I prioritise factors that directly impact user experience: intuitive navigation, task efficiency, clarity of instructions, and overall satisfaction. Creating realistic user scenarios and observing user interactions allow us to gather valuable feedback on usability. Elements like accessibility, consistent design, and effective error handling are critical considerations during these tests. Early identification of user-related issues empowers us to refine the software, ensuring a positive and seamless user experience.

29. How do you handle a critical bug discovered just before a major release deadline?

This interview question for QA engineers assesses the candidate’s approach to managing high-priority issues in time-sensitive situations.

Answer: Discovering a critical bug before a major release deadline demands a structured approach. Firstly, I’d immediately communicate the issue to all stakeholders, providing a clear and detailed report outlining the impact and potential risks to the release. Simultaneously, I’d collaborate closely with developers to understand the root cause and explore potential quick-fix solutions without compromising software integrity. If a temporary workaround is feasible, I’d propose implementing it while preparing a more comprehensive fix post-release. Prioritising testing efforts on the fix, I’d conduct thorough regression testing, focusing on the impacted areas to ensure the proposed solution’s effectiveness. Additionally, I’d collaborate with project managers to assess the possibility of a slight release delay to accommodate necessary testing and avoid jeopardising software quality. Clear and transparent communication with all stakeholders throughout this process is crucial to managing expectations and ensuring a collective understanding of the situation and its potential resolutions. Ultimately, the goal is to resolve the critical bug while balancing on-time delivery against the software’s stability and quality.

30. Explain the concept of performance testing and discuss different types of performance tests.

Answer: Performance testing evaluates a system’s behaviour under different workloads and conditions, ensuring it meets performance benchmarks. There are several types of performance tests:

- Load Testing: Assesses system behaviour under anticipated load levels to ensure it handles expected user traffic without performance degradation.

- Stress Testing: Pushes the system beyond its normal capacity to identify breaking points or failure thresholds under extreme conditions.

- Endurance Testing: Evaluates system performance over an extended period to ensure sustained functionality without degradation.

- Scalability Testing: Measures the system’s ability to scale up or down by increasing or decreasing resources while maintaining performance.

- Spike Testing: Assesses system response to sudden, large spikes or fluctuations in user activity to ensure stability during such events.

Each type of performance test addresses specific aspects of system behaviour under different scenarios, ensuring optimal performance across various conditions.

31. What are your strategies for ensuring effective project communication between testers and developers?

Answer: I prioritise effective communication by organising regular meetings where testers and developers discuss project progress, challenges, and upcoming tasks. Clear and concise documentation, such as test plans and bug reports, is maintained for easy accessibility by all team members. Additionally, I advocate for collaborative tools and issue-tracking systems that facilitate interaction, feedback sharing, and progress tracking between testers and developers. Providing constructive feedback on discovered issues fosters productive dialogues and quicker resolutions. I also encourage cross-team training sessions to enhance mutual understanding of roles and challenges, creating a culture of open communication and collaboration within the team.

Ready to supercharge your collaboration game? aqua and Capture are your dynamic duo. aqua fosters seamless interaction with its collaborative features and issue-tracking systems, making feedback sharing and progress tracking a breeze for testers and developers. Meanwhile, Capture adds visual magic, providing a shared language for spotting issues. It brings transparency to the bug-reporting process, because every report comes with video proof of the exact bug that needs to be fixed. Imagine a world where collaboration flows effortlessly, and issues are resolved in the blink of an eye. Dive into a new era of teamwork with aqua and Capture, your partners in collaboration excellence!


32. Discuss the challenges and best practices in testing for mobile applications across various platforms and OS versions.

Answer: Testing mobile applications across different platforms and OS versions brings several challenges. Firstly, ensuring compatibility across various devices with different screen sizes, resolutions, and hardware specifications requires meticulous testing. Additionally, addressing fragmentation in OS versions and device types demands a comprehensive test strategy covering various combinations. To overcome these challenges, cloud-based testing services and emulators make it possible to test on many platforms efficiently. Implementing robust test automation frameworks that support cross-platform testing helps achieve broader coverage. Moreover, prioritising tests based on market share and user preferences helps allocate testing efforts effectively. Staying updated with the latest trends, tools, and guidelines specific to mobile app testing is crucial for maintaining high-quality standards across diverse mobile ecosystems.
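Prioritising by market share can be sketched in a few lines of Python. This is only an illustration: the device labels and the share figures below are invented, and `pick_devices` is a hypothetical helper, not part of any tool. The idea is to test the most-used combinations first until a target share of users is covered.

```python
def pick_devices(shares: dict[str, int], target_percent: int) -> list[str]:
    """Pick the most-used device/OS combinations until the target share is covered."""
    chosen: list[str] = []
    covered = 0
    for device, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= target_percent:
            break
        chosen.append(device)
        covered += share
    return chosen


# Invented usage figures (percent of users), for illustration only.
usage = {
    "platform-a / os-3": 30,
    "platform-b / os-7": 25,
    "platform-a / os-2": 20,
    "platform-b / os-6": 15,
    "platform-a / os-1": 10,
}

print(pick_devices(usage, target_percent=70))
```

With these made-up numbers, the first three combinations already cover 75% of users, so the remaining two can be tested less often or on emulators.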

33. How do you ensure your testing process complies with industry standards and best practices?

Answer: Ensuring compliance with industry standards and best practices in our testing process involves several key strategies. Firstly, I stay updated with the latest industry trends, guidelines, and standards, such as those from ISTQB or IEEE, ensuring our practices align with established benchmarks. Regular reviews of our testing methodologies against these standards help identify areas for improvement. Additionally, I actively participate in relevant training sessions or workshops to incorporate emerging practices into our workflows. Implementing robust documentation practices and conducting periodic audits to validate adherence to these standards further ensures compliance.

34. Describe a situation where you had to make a quick decision during testing due to time constraints. How did you handle it?

Answer: In a time-constrained scenario, I encountered an unexpected issue during regression testing just before a critical release deadline. With limited time available, I swiftly analysed the severity and impact of the issue on core functionalities. Understanding the urgency, I prioritised the affected test cases based on their criticality to the system’s stability and essential user pathways. Communicating the situation promptly to the team, I proposed a risk-based approach, focusing on critical test scenarios while deferring less impactful ones. This allowed us to ensure that the most crucial functionalities were thoroughly tested within the available time frame, minimising the risk of critical issues in the release. I also documented the deferred test cases for future attention to maintain comprehensive testing standards once the immediate deadline pressure had passed.
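The risk-based approach described in this answer can be sketched as follows. It is a simplified illustration: the `RegressionCase` fields, the 1 to 5 scoring scale, and the sample cases are all assumptions, and real teams often weigh more factors than impact and likelihood.

```python
from dataclasses import dataclass


@dataclass
class RegressionCase:
    name: str
    impact: int      # 1 (minor) to 5 (critical), assumed scale
    likelihood: int  # 1 (unlikely to fail) to 5 (very likely), assumed scale
    minutes: int     # estimated execution time


def plan(cases: list[RegressionCase], budget_minutes: int) -> tuple[list[str], list[str]]:
    """Run the riskiest cases that fit the time budget and defer the rest."""
    run_now: list[str] = []
    deferred: list[str] = []
    remaining = budget_minutes
    # Risk score = impact x likelihood; the highest risk is considered first.
    for case in sorted(cases, key=lambda c: c.impact * c.likelihood, reverse=True):
        if case.minutes <= remaining:
            run_now.append(case.name)
            remaining -= case.minutes
        else:
            deferred.append(case.name)
    return run_now, deferred


# Hypothetical regression suite.
suite = [
    RegressionCase("checkout", impact=5, likelihood=4, minutes=30),
    RegressionCase("login", impact=5, likelihood=3, minutes=20),
    RegressionCase("profile_avatar", impact=2, likelihood=2, minutes=15),
    RegressionCase("report_export", impact=3, likelihood=3, minutes=40),
]

run_now, deferred = plan(suite, budget_minutes=60)
print("run now:", run_now)
print("deferred:", deferred)
```

The `deferred` list doubles as the documentation of what was skipped, so those cases can be picked up once the deadline pressure has passed.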

35. Explain the difference between black-box testing and white-box testing. When would you use each approach?

Answer: Black-box testing involves evaluating software functionality without examining its internal structure. It focuses on validating system behaviour against specified requirements, making it suitable for scenarios where understanding code details isn’t necessary, such as user acceptance testing or testing third-party software. On the other hand, white-box testing involves inspecting the internal code structure to validate its correctness and coverage. It is ideal for testing intricate algorithms or critical system components where detailed code examination is crucial, as in unit testing or code coverage analysis. The choice between these approaches depends on the testing objectives, project requirements, and the level of access to the software’s internal components.
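A small Python sketch can show the difference in practice. The `shipping_fee` function and its rule (orders of 50 or more ship free, others cost 5) are invented for illustration. The black-box checks come from the stated requirement alone, while the white-box checks come from reading the code and targeting each branch.

```python
def shipping_fee(order_total: float) -> float:
    """Hypothetical function under test."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    if order_total >= 50:
        return 0.0
    return 5.0


def black_box_checks() -> None:
    # Derived only from the requirement; no knowledge of the code is needed.
    assert shipping_fee(80) == 0.0
    assert shipping_fee(20) == 5.0


def white_box_checks() -> None:
    # Derived from reading the code: one check per branch, including the guard.
    try:
        shipping_fee(-1)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for a negative total")
    assert shipping_fee(50) == 0.0     # exactly on the >= 50 branch
    assert shipping_fee(49.99) == 5.0  # falls through to the default fee


black_box_checks()
white_box_checks()
print("all checks passed")
```

Note that the negative-total guard is only exercised by the white-box checks, because the requirement as worded never mentions it.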

36. What are your thoughts on integrating AI/ML technologies in software testing?

Answer: I believe integrating AI/ML in software testing presents exciting opportunities. These technologies can enhance test automation by intelligently identifying patterns, predicting potential issues, and generating test cases based on learned behaviours. AI-driven tools can analyse vast datasets to identify anomalies or optimise test coverage, significantly reducing manual effort and improving test efficiency. However, while leveraging AI/ML, it’s crucial to ensure transparent and explainable algorithms, validate the accuracy of AI-generated test cases, and maintain human oversight to prevent bias or incorrect assumptions. Overall, embracing AI/ML technologies in testing can revolutionise efficiency and accuracy, but it’s essential to balance automation and human intervention for optimal results.

Talking of AI-generated test cases, there is a solution that will turn your whole process into something effortless in seconds. We are talking about aqua cloud – a solution that makes testing faster and smarter than ever. Picture this: You open a requirement, and aqua immediately auto-creates an entire test case covering it. No more manual headaches. Just plain words turning into full test steps like magic. But here’s the kicker – aqua’s AI is about more than just speed; it’s about prioritising what matters. Auto-prioritise your test cases, ensuring your critical tests take the spotlight before deployment. Embrace the future of testing with aqua’s AI – and have a testing wizard within your team.


37. How do you ensure efficient testing in a large-scale enterprise environment?

Answer: Efficient testing in a large-scale enterprise involves a multifaceted approach. Firstly, establishing clear communication channels and robust collaboration among cross-functional teams is crucial. Implementing a centralised test management system helps in orchestrating testing efforts across diverse projects and teams. Prioritising test automation for repetitive and critical test scenarios optimises resource utilisation and accelerates testing cycles. Additionally, defining comprehensive test strategies tailored to the enterprise’s needs ensures consistent quality across different products or departments. Regular monitoring of key metrics, such as test coverage and defect density, helps identify bottlenecks and improvement areas. Lastly, fostering a culture of continuous learning and adaptation to evolving technologies ensures the testing process remains agile and responsive to enterprise-scale challenges.
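The two metrics mentioned in this answer are simple to compute. The sketch below uses one common definition of each (defects per thousand lines of code, and the percentage of requirements with at least one test); other definitions exist, and the input numbers are invented.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC), one common definition."""
    return defects / (lines_of_code / 1000)


def requirement_coverage(covered: int, total: int) -> float:
    """Percentage of requirements that have at least one test case."""
    return 100 * covered / total


# Invented figures for a single module.
print(defect_density(defects=12, lines_of_code=8000))  # defects per KLOC
print(requirement_coverage(covered=45, total=50))      # percent
```

Tracking these per module or per team over time is usually more informative than a single snapshot, because a rising defect density points to where extra testing effort is needed.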

38. How can Artificial Intelligence enhance software testing processes, specifically in test case generation or execution?

Answer: AI presents significant advancements in software testing, particularly in test case generation and execution. AI-powered testing tools can analyse vast datasets, historical test results, and application behaviour to generate diverse and effective test cases autonomously. These tools leverage machine learning algorithms to identify patterns, anomalies, and risk areas, optimising test coverage and efficiency. Moreover, AI-driven test execution can intelligently prioritise and schedule test runs, accelerating the testing process while focusing on critical areas prone to failure. However, while AI enhances automation and efficiency, human oversight remains essential to validate AI-generated test cases, ensuring accuracy and relevance to evolving software functionalities. Overall, AI holds immense promise in revolutionising test case generation and execution by significantly reducing manual effort and enhancing overall test coverage and effectiveness.

39. What strategies would you employ to handle evolving or changing requirements in a software testing project?

Answer: I would employ several key strategies to handle evolving or changing requirements in a software testing project. Firstly, establishing a robust communication channel with stakeholders and development teams allows for continuous updates and clarifications on evolving requirements. This ensures that the testing efforts align with the latest project objectives. Secondly, I prioritise flexibility in testing plans, allowing for adaptable methodologies like Agile or iterative testing approaches. Embracing incremental testing allows for frequent adjustments to accommodate changing requirements. Thirdly, I emphasise comprehensive documentation and traceability to track changes, ensuring test cases align with evolving requirements. Moreover, regular reviews with stakeholders help validate and refine testing strategies based on changing needs. Lastly, maintaining a mindset of agility and adaptability within the testing team allows for proactive adjustments to meet evolving requirements efficiently.

40. How do you determine the test coverage in a complex software system, and what factors do you consider when assessing it?

Answer: Determining test coverage in a complex software system calls for a multifaceted approach. Firstly, I carefully analyse the project’s requirements and specifications, identifying critical functionalities, business workflows, and potential risk areas. Secondly, I categorise test coverage into various dimensions: functional, non-functional, code coverage, and risk-based coverage. Each dimension focuses on different aspects, ensuring a holistic approach to testing. Thirdly, I consider factors such as business impact, customer usage patterns, historical defect data, and regulatory compliance to prioritise testing efforts. I employ techniques like equivalence partitioning and boundary value analysis to maximise coverage while minimising redundant test cases. Continuous evaluation and adaptation of test coverage based on evolving project needs and feedback ensure comprehensive coverage in the dynamic software development landscape.
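Equivalence partitioning and boundary value analysis are easy to demonstrate with a short sketch. The example assumes a hypothetical input field that accepts whole numbers in an inclusive range (an age between 18 and 65); the helper names are made up for illustration.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Values just outside, on, and just inside each edge of an inclusive range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def partition_representatives(low: int, high: int) -> dict[str, int]:
    """One representative value for each equivalence partition."""
    return {
        "below": low - 10,            # invalid partition: too small
        "valid": (low + high) // 2,   # valid partition: somewhere in the middle
        "above": high + 10,           # invalid partition: too large
    }


# Hypothetical rule: the age field accepts 18 to 65 inclusive.
print(boundary_values(18, 65))
print(partition_representatives(18, 65))
```

Nine values are enough to cover both techniques here, instead of trying every possible age, which is how these methods maximise coverage while keeping the number of test cases small.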

Conclusion

Preparing for a job interview can be daunting, but armed with the right questions and strategies, you can approach it with confidence. The 40 interview questions outlined in this article provide a comprehensive guide to help you anticipate and navigate the most common inquiries for QA roles. Remember to tailor your responses to showcase your unique skills, experiences, and qualifications. Additionally, don’t forget the importance of practising your answers and refining your interview techniques to ensure you make a lasting impression on potential employers. With thorough preparation and a positive mindset, you can ace your next job interview and take a significant step towards achieving your career goals.